Operating Systems 2014F Lecture 10

The audio from the lecture given on October 8, 2014 is now available.

Assignment 5 visit:

By using base 16 vs. base 10, yes, the alphabet is larger. When you talk about hex vs. decimal, you are only changing how we read the number. Still, there's something more correct about using hex vs. base 10. A hexadecimal digit represents exactly 4 bits. How many bits does a base 10 digit represent? Somewhere between 3 and 4 (10 lies between 8 and 16, so it's a fractional number of bits, about 3.32). It's messy. Decimal is not the native base of a computer. Base 16 maps perfectly to base 2. That's why you also see octal: 3 bits per digit vs. 4 bits (but a reduced range). When you use hexadecimal, each digit represents 4 bits, and it is a bit cleaner.
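As a small illustration of the hex-to-bits mapping, here is a sketch in Python. The value `0xBEEF` is just an arbitrary example, not something from the lecture.

```python
import math

# Each hexadecimal digit corresponds to exactly one group of 4 bits.
value = 0xBEEF  # arbitrary example value

hex_digits = format(value, "04x")   # the value written as 4 hex digits
bits = format(value, "016b")        # the same value written as 16 bits

# Split the bit string into 4-bit groups and turn each group back into a hex digit.
nibbles = [bits[i:i + 4] for i in range(0, len(bits), 4)]
rebuilt = "".join(format(int(n, 2), "x") for n in nibbles)

print(hex_digits, nibbles, rebuilt)

# A decimal digit, by contrast, carries a fractional number of bits.
bits_per_decimal_digit = math.log2(10)
print(bits_per_decimal_digit)
```

Each hex digit lines up with one 4-bit group, which is why you can convert between hex and binary digit by digit. There is no such digit-by-digit mapping for decimal.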

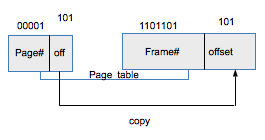

When you say the offset range is a power of two, the page size is also a power of two. The number of bits used to encode the offset determines the range of the offset. The offset picks out a specific byte in a page. What is the range of bytes that can go in a page? Take classic x86:

What is the range of offsets of a 4K page? The offsets are 0 - 4095. Another thing that you will need for the assignment: there is a question that refers to Peterson's algorithm. It doesn't work on modern machines. The big idea is the memory hierarchy. The key assumption is that when one processor writes to memory, the other processors will see that write, that value. Is that true in modern systems? No.
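Going back to the page offset question, here is a minimal sketch of how an address splits into a page number and an offset when the page size is a power of two. The 4K page size comes from the notes; the function name `split_address` and the example address are made up for illustration.

```python
PAGE_SIZE = 4096                          # 4K page, a power of two
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # number of bits needed for the offset
OFFSET_MASK = PAGE_SIZE - 1               # low bits set, selects the offset


def split_address(addr):
    """Return (page number, offset within the page) for an address."""
    return addr >> OFFSET_BITS, addr & OFFSET_MASK


page, offset = split_address(0x12345)
print(hex(page), hex(offset))
```

Because the page size is a power of two, the split is just a shift and a mask. The offset is always in the range 0 to `PAGE_SIZE - 1`.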

Concurrency Mechanisms:

locks, condition variables, semaphores

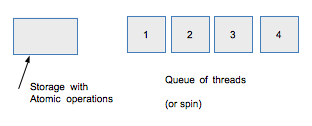

You build all of these using the same mechanism: something that lets you check a value and change it in one atomic operation. That is the basic functionality of the hardware. The semantics are different in these higher level constructs. When do you use what? What is the purpose of these things? There are huge amounts of overlap. What you are talking about with all of these is that you have some sort of storage that has atomic operations, so you can check its value and change it all at one time (so you don't have race conditions when you manipulate the value), and then you have a queue of threads.

The difference between these is related to how you deal with the interaction between the storage and the queue of threads. If you have a lock, with grab lock and release lock, does releasing the lock always succeed? Yes, if you have the lock and you release it, it always succeeds. Grab lock can block. When we talk about blocking, the thread is going to sit there and wait for the condition to happen; nothing is going to happen to that thread until that condition is met. You often also have a try lock. Try lock means check to see if the lock is grabbable or not. It will grab the lock if it's available, but if it's not available it will return right away. It's non-blocking, because maybe you don't want to sit there and block. Most of the time, though, you will try to grab the lock, and if that fails, the thread will wait until grabbing the lock succeeds.
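The three lock operations above can be sketched with Python's `threading.Lock`. This is only an illustration; the notes do not tie the discussion to any particular API, and the `worker` function and counts are made up for the example.

```python
import threading

lock = threading.Lock()

lock.acquire()                              # grab lock: would block if it were already held
try_while_held = lock.acquire(blocking=False)  # try lock: returns immediately instead of blocking
lock.release()                              # release: succeeds because we hold the lock
try_when_free = lock.acquire(blocking=False)   # the lock is free now, so try lock grabs it
lock.release()

print(try_while_held, try_when_free)

# The usual use: several threads take turns updating shared storage.
counter = 0


def worker(n):
    global counter
    for _ in range(n):
        with lock:          # grab lock (blocking), released at the end of the block
            counter += 1


threads = [threading.Thread(target=worker, args=(1000,)) for _ in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)
```

The try lock is what you would use when a thread has something else useful to do if the lock is busy.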

What if 5 processes try to grab the lock all at once, what do the rest do? They are all blocked, and there are two ways for them to wait. One is to just go into an infinite loop: the thread keeps trying, trying, trying until it succeeds. This is called a spin lock. If the CPU is running the spin lock and doing nothing else, it is useless; it is not an efficient use of resources. It is still sometimes a good idea: if you expect the time you have to wait to be very small, because no one is going to be using the lock for very long, it's better to sit there and spin.

It's like going to a government office: if you want something to happen, you will probably have to wait in line. When you are spin locking, you are sitting in the queue doing nothing. What you can do instead is have a wait queue. Here the threads queue up and wait, and you are freeing up the resources to do something else. The processor running the thread sees that the lock is taken and puts that thread to sleep.

What does sleep mean? When a thread goes to sleep, that implies something about the scheduling of the thread. It is kicked off the CPU it's running on. The thread is saying: I am not going to consume any CPU right now. As with sleep in biological terms, it is still consuming resources. It's still consuming space (still consuming RAM, still living in cache), but it is not being actively run by a core. When you say a thread yields, it is saying: I don't need this CPU anymore. When you call sleep you are making a system call that says, for example, I don't want to do anything for at least 10 seconds. You are taken out of the list of running processes, you are put on the list of blocked processes, and you wait for the timer to go off. Then your process is brought back in and is allowed to run. Exactly when depends on the timing of the CPU. In general you will sleep for at least that amount of time.

When we are talking about locks we are talking about multiple threads. Processes do not have shared storage; they have logically separate copies. Threads share an address space, and use it to coordinate their operations. If the lock is not available, a thread queues up waiting for the lock. You have these threads lined up waiting for service.

Wait = sleep

If a thread is actively waiting while running on the CPU, it is spinning. You don't queue up with spinning, because every core involved is just sitting there spinning. For 5 threads to be blocked on a spin lock, all 5 threads are running on 5 cores. You don't use spin locks on things you expect to wait long for. Sometimes you do want to use a spin lock, because it takes effort to put a thread to sleep. It's a relatively expensive thing to put a thread to sleep and then have it wake up. Putting a thread to sleep can potentially involve a context switch; it depends on how threads are implemented. It involves a system call, because you call sleep(). If you are going to be waiting less than the time it takes to execute 10 - 50 instructions, you want to use a spin lock.

With a lock, all the threads queue up, and when the one that was active gets done, the next one goes. It's a FIFO queue. They take their turns.

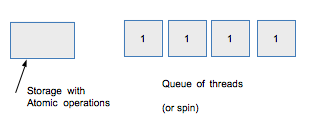

A condition variable has the same basic idea. We have a condition that we want to check and we have a wait queue, but the difference is in how we treat the queue. I'm waiting for something to happen: a key to be pressed, something to come in from the network, the other threads to have gotten so far in the computation. The operations are check condition (blocking), check condition (non-blocking) and set condition. That seems kind of the same as a lock. What's really happening here is that when you check for the condition, you normally do the blocking one: I'm going to sleep until the condition has happened. The set condition says that it happened.

The difference lies in how you treat the queue. With a lock you are waiting for exclusive access to something (an entire data structure, a tree or something). Only one thread can access the resource at a time, enforcing mutual exclusion; we want serial semantics. With a condition variable we are not trying to enforce serial semantics. Tell everyone! When the condition becomes true, everyone in the queue wakes up and gets service. The basic underlying mechanism is the same, but the semantics are different. Think of a club: are the doors open? Everyone flows in. Compare that with sitting at the passport office, where 1 person goes up, then another person goes up.

In a concurrent application you will use these different constructs. A lock is generally about protecting access to a data structure, whereas a condition variable is about waiting until some state of the computation or state of the environment has come to pass. With locks the question is: can I get exclusive access to the resource? Normally, code that uses locks will have some mutex (mutual exclusion), which is a binary semaphore.
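The "tell everyone" behaviour can be sketched with Python's `threading.Condition`. This is an illustrative sketch only; the names `guest`, `open_doors` and `doors_open` are made up to match the club analogy. Note that in Python the condition object comes with its own lock protecting the flag, which is an API detail rather than something from the lecture.

```python
import threading

doors_open = False              # the condition everyone is waiting on
cond = threading.Condition()    # wait queue plus a lock guarding doors_open
inside = []


def guest(name):
    with cond:
        while not doors_open:   # check condition (blocking): sleep until it holds
            cond.wait()
        inside.append(name)


def open_doors():
    global doors_open
    with cond:
        doors_open = True       # set condition
        cond.notify_all()       # tell everyone: wake the whole queue, not just one


guests = [threading.Thread(target=guest, args=(f"guest{i}",)) for i in range(5)]
for g in guests:
    g.start()
open_doors()
for g in guests:
    g.join()
print(sorted(inside))
```

With a lock, one waiter gets in per release. Here a single `notify_all()` lets every waiting guest through, because the condition stays true once set.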

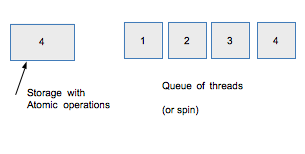

Semaphores: up / down. In a general counting semaphore the value can be any integer. You can use semaphores to implement both condition variables and locks. The idea of a semaphore is: down decrements the value, and blocks only if that would take it below 0.

When you say up, you increment it back up. A lock, in this case, is a semaphore whose value you set to 1. Instead of saying only 1 can be present, a semaphore is really like a bathroom that 4 people can use at a time. When someone has come out, someone else can go in. If the room is empty, there is a certain number of resources and you can go in, but if it fills up, everyone else is waiting. In practice, if you look at how people build things, people use mutexes all the time, and condition variables have clear semantics. Semaphores are more of a building block: I want to only have so many threads running at the same time. They are much harder to reason about. You have to be very careful with your downs and ups. They are confusing, but you should know what they are.
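Here is a sketch of the bathroom analogy using Python's `threading.Semaphore`, where `acquire()` plays the role of down and `release()` the role of up. The capacity of 4 comes from the notes; the number of visitors, the sleep time and the bookkeeping variables are assumptions made for the example.

```python
import threading
import time

CAPACITY = 4
bathroom = threading.Semaphore(CAPACITY)  # counting semaphore, starts at 4
state_lock = threading.Lock()             # protects the two counters below
occupants = 0
max_occupants = 0


def visit():
    global occupants, max_occupants
    bathroom.acquire()                    # down: blocks while the room is full
    try:
        with state_lock:
            occupants += 1
            max_occupants = max(max_occupants, occupants)
        time.sleep(0.01)                  # stay inside for a moment
        with state_lock:
            occupants -= 1
    finally:
        bathroom.release()                # up: lets one waiting thread in


visitors = [threading.Thread(target=visit) for _ in range(10)]
for v in visitors:
    v.start()
for v in visitors:
    v.join()
print(max_occupants)
```

Setting `CAPACITY` to 1 turns the same semaphore into a lock. Every down has to be matched by exactly one up, which is where the care with ups and downs comes in.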

What's on the midterm? The basic coverage of the midterm is the assignments and the tutorials, with a focus on the assignments. The key thing with pthreads is that it's supposed to be portable across Unix-like systems. The exact system calls used for this can vary. When you use pthreads, it's doing the low level assembly; that's present as well. It's a mix of things: it can be implemented partly in user space and partly in the kernel. The mapping between the low level mechanisms and the API is non-trivial. When you look at the code, it's surprisingly complex. Everything through to assignment 5 is going to be on the midterm. If you look at assignment 5, it has bits and pieces of the lectures from last week and this week.

producer / consumer - you should think of a pipe. On the command line you could do these things like: sort foo | uniq

It will sort the lines of the file, and uniq will remove any duplicate lines. I could add another command, for example one that inserts a line break after every word. The pipe operator means: take stdout from one program, and provide it as stdin for the following program.

We are talking about processes, the one on the left side of the pipe is the producer. The one on the right is the consumer. The pipe operation does not work on a file, it works on processes. ls you always think of as terminating, but you could do something that produces never ending values.

tail is running continuously. Is this thing constantly cycling, just waiting to see if there is input? No, it's not. Does every character that comes out of tail get processed by grep one at a time, is that how these are interacting? That would be dumb. We want these to be coordinated, but we don't want the kernel to have to control, for every byte, the relative rates of how fast these run.

cat /dev/random | sort | uniq

tail -f /var/log/syslog | grep anil

This is a coordination problem: the producer could run faster, or the consumer could run faster. If the producer runs too fast for the consumer, you may want to put the producer to sleep. If the consumer runs too fast, you want to let it run for a while and then put it to sleep until the producer has produced something.

tail should not keep running unless grep can keep up. This is the producer / consumer pattern. In order for it to work you have to use condition variables and locks. The key idea here is that you have some storage between the two: a buffer. The buffer can be arbitrarily sized, it can grow and shrink. You let the producer produce for a while and the consumer consume for a while. A buffer is a circular list: when you get to the end, you start again at the beginning.

To guarantee exclusive access, you have to have a mutex for the entire data structure (the buffer), to make sure the producer and the consumer don't step on each other and aren't both messing with it at the same time.
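Putting the pieces together, here is a minimal sketch of a producer and a consumer sharing a circular buffer, with one mutex for the whole buffer and two condition variables. It assumes a fixed-size buffer for simplicity (the notes allow the buffer to grow and shrink), and the class and method names (`BoundedBuffer`, `put`, `get`) are made up for illustration. This is not how the kernel implements pipes.

```python
import threading


class BoundedBuffer:
    """Fixed-size circular buffer shared by a producer and a consumer."""

    def __init__(self, capacity):
        self.slots = [None] * capacity
        self.capacity = capacity
        self.head = 0                 # next slot to read
        self.tail = 0                 # next slot to write
        self.count = 0                # number of filled slots
        self.mutex = threading.Lock() # exclusive access to the whole structure
        self.not_full = threading.Condition(self.mutex)
        self.not_empty = threading.Condition(self.mutex)

    def put(self, item):
        with self.mutex:
            while self.count == self.capacity:   # producer too fast: sleep
                self.not_full.wait()
            self.slots[self.tail] = item
            self.tail = (self.tail + 1) % self.capacity  # wrap around at the end
            self.count += 1
            self.not_empty.notify()

    def get(self):
        with self.mutex:
            while self.count == 0:               # consumer too fast: sleep
                self.not_empty.wait()
            item = self.slots[self.head]
            self.head = (self.head + 1) % self.capacity
            self.count -= 1
            self.not_full.notify()
            return item


buf = BoundedBuffer(4)
received = []


def producer():
    for i in range(20):
        buf.put(i)
    buf.put(None)                     # sentinel: nothing more to produce


def consumer():
    while True:
        item = buf.get()
        if item is None:
            break
        received.append(item)


threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(received)
```

Whichever side runs too fast ends up asleep on its condition variable until the other side catches up, so neither has to spin or be scheduled byte by byte.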