DistOS-2011W NTP

Introduction:Context/Background

Network Time Protocol (NTP) synchronizes clocks of hosts and routers. According to NIST estimates there are 10-20 million NTP servers and clients deployed in the internet and its tributaries all over the world. NTP software has been ported to almost every workstation and server platform available today – from PCs to Cray’s – UNIX, Windows, VMS and embedded systems, even home routers and battery backup systems.

NTP provides nominal accuracies of low tens of milliseconds on WANs, sub milliseconds on LANs and sub microseconds using a precision time source such as cesium oscillator or GPS receiver. Our time protocol was designed over the LAN and our goal was to achieve sub milliseconds of accuracy.

Importance

Precision of time is very important in the computer world. Following are some example why it is so important to maintain a precise and accurate time.

Network monitoring, measurement and control, Distributed database transaction journaling and logging, Secure document timestamps with cryptographic certification, Radio and TV programming lunch and monitoring, Stock market buy and sell orders, Distributed network gaming and training, etc.

Definition of the problem

My goal was to acquire time accuracy in the Linux lab assuming that all the computers do not have internet connection. To time synchronize all the computers I had to build our own hierarchy of NTP servers and clients.

Summary of the result

An efficient hierarchy of NTP server and client has been implemented. Precision of Sub milliseconds were achieved.

Outline of the report

Detailed background information and the structure of our time protocol are provided in Section 2. The development setup and code explanation is reviewed in Section 3. Details of which server/client contains which files are given in section 4. My contributions in this implementation are described in Section 5.

Detailed context/background information

In this section the background information of Network Time protocol and the hierarchical model and the comparison with the original NTP designed by Dave Mills is reviewed. For unambiguity I will call the protocol as Linux lab time protocol (LLTP) and the original Network time protocol as NTP.

NTP timestamps

The 64-bit timestamps used by NTP consist of a 32-bit second part and a 32-bit fractional second part. I followed the same time structure for our LLTP which gives us a time scale of 232 seconds (136 years) and a theoretical resolution of 2-32 seconds (233 picoseconds).

Structure

NTP uses a hierarchical, semi-layered system of levels of clock sources; each level of this hierarchy is termed a stratum and assigned a layer number starting with 0 at the top. The stratum level defines its distance from the reference clock and exists to prevent cyclical dependencies in hierarchy.

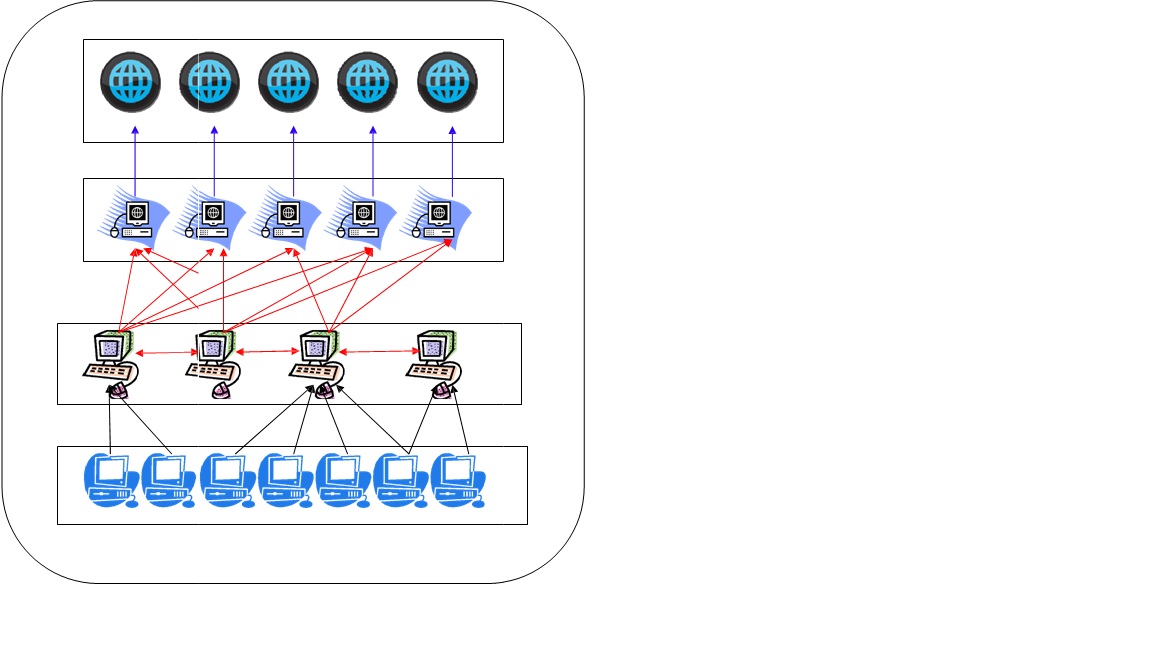

My target is to develop a time synchronizing software for a small office or lab with a large number of computer where a very few computer has internet connectivity but all the computers are connected together in a local network. As a result not all the computer is able to connect to internet for time accuracy. LLTP uses similar hierarchical, semi-layered sources as NTP. Each layer of this hierarchy is termed as level and assigned a layer number starting with 1.

Level 1 server

Computers with internet connectivity are the level 1 server for our LLTP

Level 1 server

synchronizes its system clock with the NTP servers on the internet. In this hierarchy, only a fixed (5) number of level 1 servers are used. Unlike NTP we don’t have the privilege to use a 10 of thousands of level 1 and level 2 servers, and unlike NTP our LLTP has only 3 level structures.

Picture below shows the connectivity and the hierarchy of LLTP

Level 2 server and client

Level 2 servers run two different programs. A client program to synchronize its system clock with the level1 servers and a server program that waits for any level 3 client for time request. Upon the request it sends its synchronized system time to the client. Level 2 servers also synchronize its system clock with the other level2 servers.

Each level2 server is connected to 4 level1 servers. It polls time from each level1 server and chooses the best time and synchronizes its system clock. How the best time is achieved is described in the section 3 below with code explanation. There are maximum 5 level2 servers with our LLTP model. Each level2 server is connected to 4 different level1 servers. None of the level2 server has the same level1 server set.

Level 3 client

Bottom of the LLTP hierarchy is level3 client. It only runs a client program that polls time from the level2 servers. It can only connect/request time from one of our level2 servers at a time. It also requires the knowledge of the IP address of the level2 server. For the load management purpose level 3 clients are not able to connect to a Level1 servers.

Development Details

Server

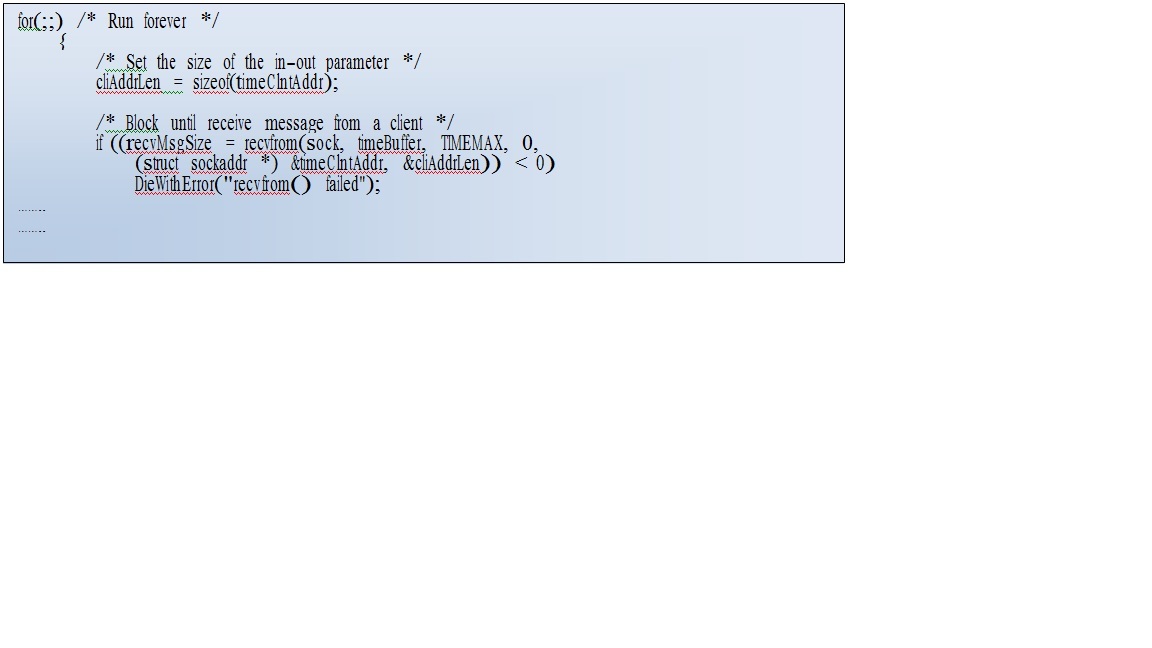

As it is mentioned in section-2, there are two servers in our LLTP model. One is level 1 and the other is level 2. Both servers wait for a client with time requests and upon receiving one send the time string. Code structure for both the servers is almost similar with minor technical differences which are explained later in this section. Both servers use a infinite for loop for waiting for the client. They use a fixed but different port number for the client connection.

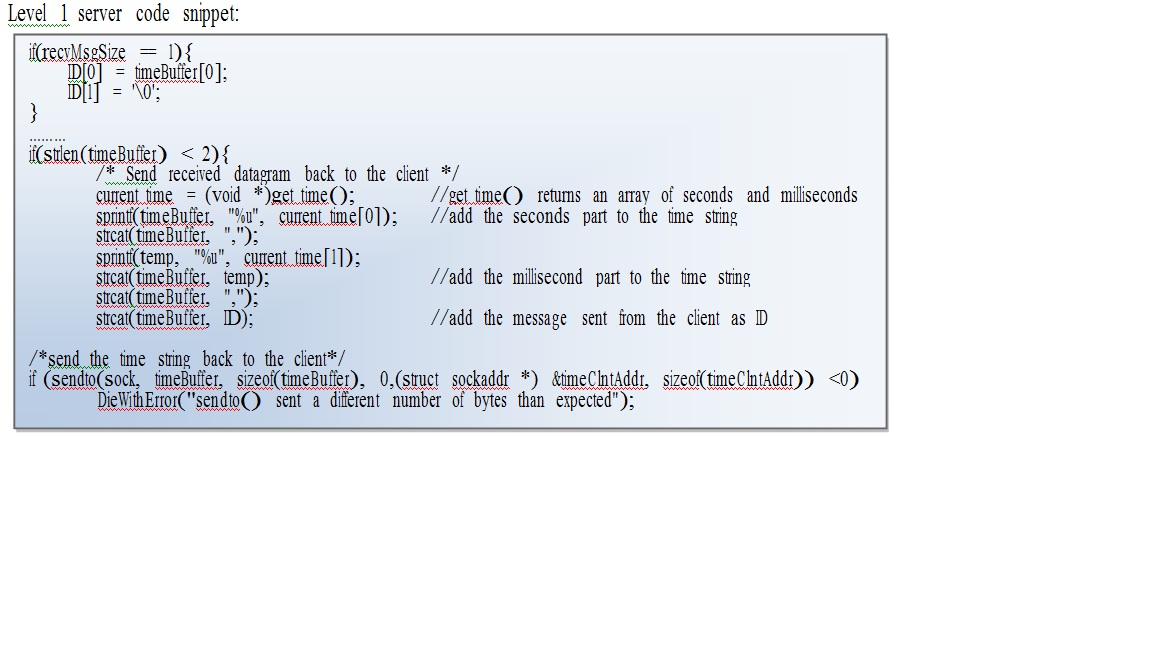

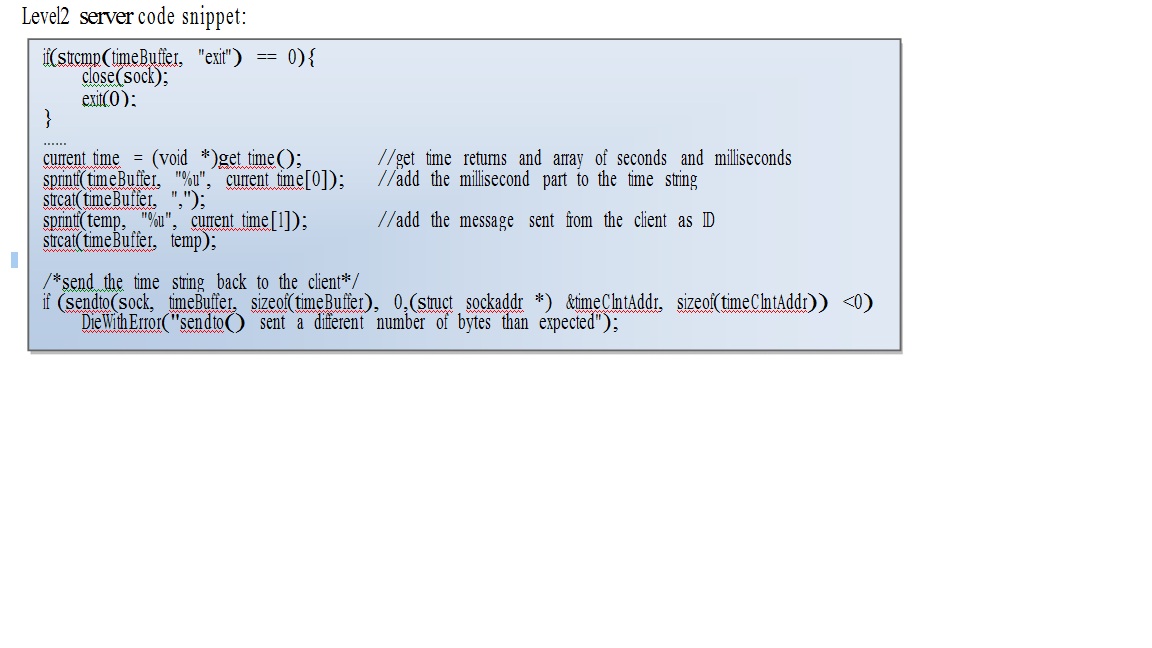

Time requests sent to Level 1 server have to contain a message no more than 1 character and it has to be an integer which is sent by the level2 client to distinguish the level1 servers from one another. Level1 server will take that message received from the client and add that at the end of the time string sent to the client. If the level1 server receives a time request message with greater size of 1than it will not return any time value. For the level2 server the maximum message size is 128 characters and it do not have to be an integer. Level2 server discards the message sent from the level3 client. A unique feature of level2 server is that a level3 client time request with “exit” message can shut down the level2 server. This feature is not implemented in level1 servers.

Both servers send time as a string which handles the problem with big and little Endean.

Client

There are also two clients for our LLTP, level2 client and level3 client. Level3 client is very straight forward where the level3 is a bit more complicated.

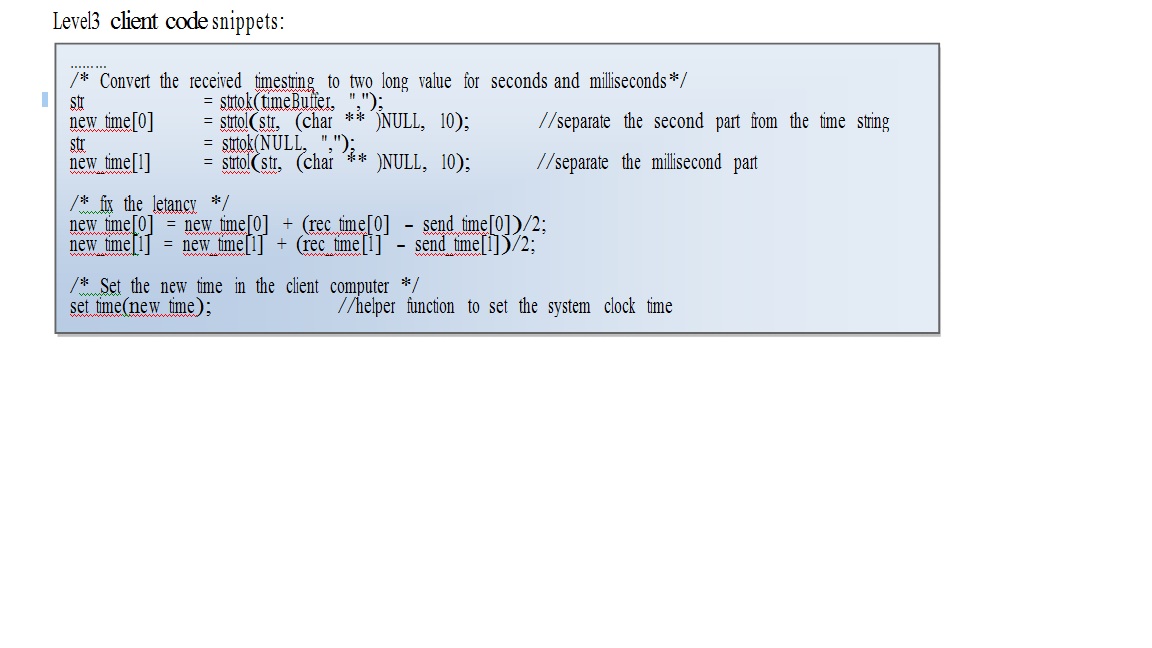

Level3 client sends a time request to the level2 server. It cannot send time request to a level1 server as the port number for both server are different and the port numbers are hard coded for both the client (leve3 client/level2 server uses port 123 where level2 client/level1 server uses port 4003). Level2 server also has a restriction over the message sent by the client which is explained above in the server section. Upon receiving the time string back from the server which contains the seconds and milliseconds part together as a string separated by a comma (‘,’), it separates this two parts and checks for the latency and calls function named set_time(long[] ) which sets the system clock time with the new time.

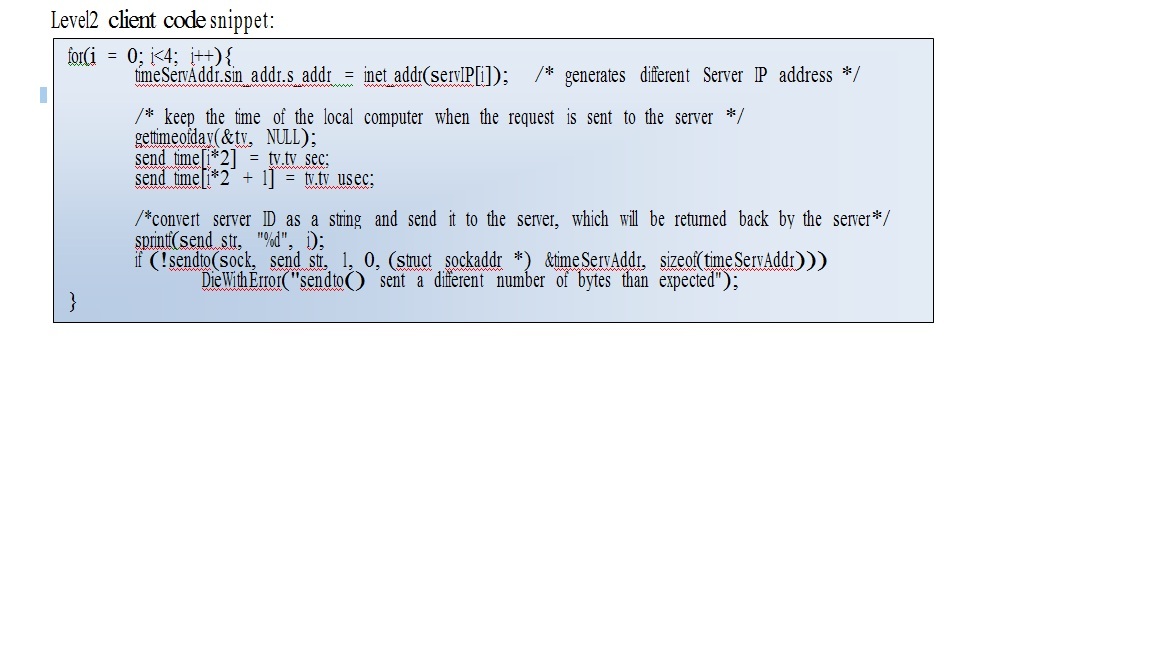

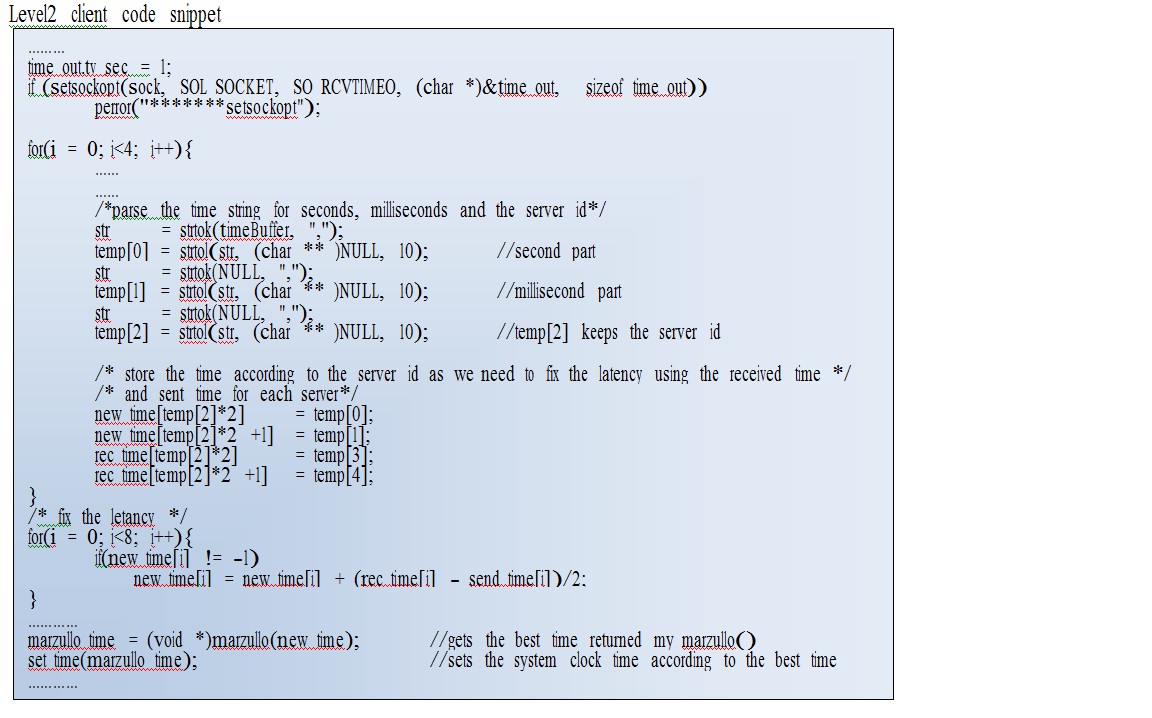

Level2 client dose almost the same things but in a broader and sophisticated way. It polls time from four different levle1 time server at the same time. When it sends the time request, it sends a unique message as the server ID to identify the time received from different level1 server which helps to fix the latency later.

Level2 client also uses a time out of 1 second. If it dose not receive the time string back from the server then it will ignore the time from that server and choose the best time from the ones it received from the other servers. Received time strings from the different servers also have the server id added at the end of the time string. Using that information level2 client saves the received time in order and fixes the latency accordingly. It keeps all the latency fixed time in an array with the invalid time being -1. Then it calls a different function called marzullo(long []), which takes the array of latency fixed times and returns the best time depending on the Marzullo’s algorithm. Function marzullo() is explained in the section 3.4. Then it uses the set_time(long []) method to set the system clock time.

Daemon client and server

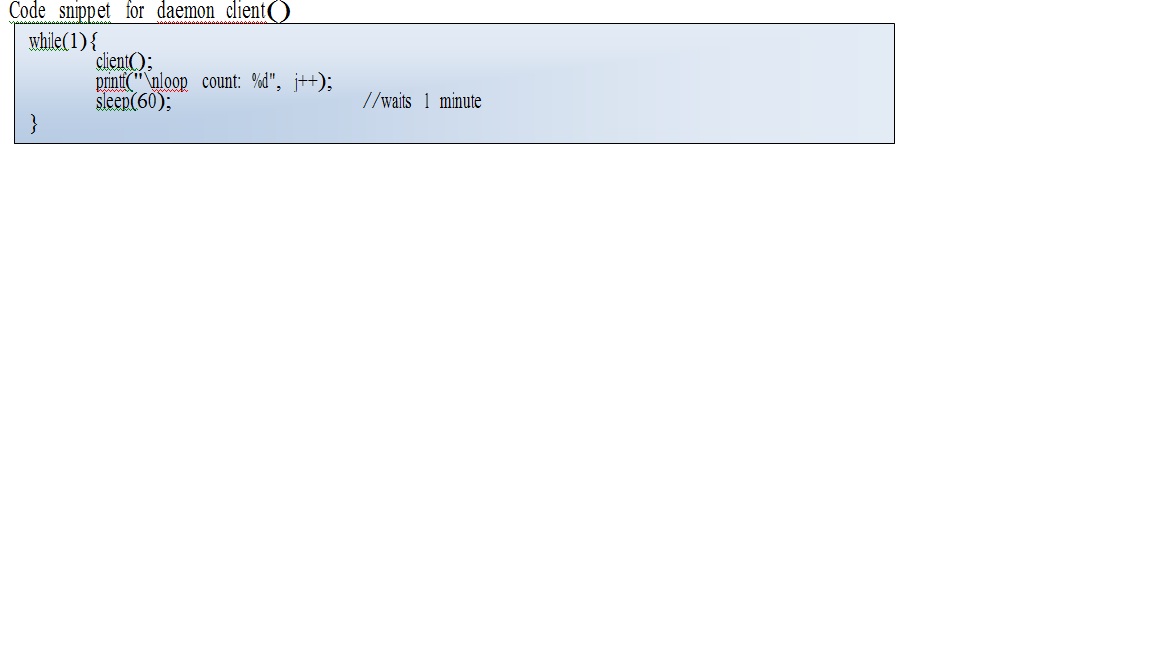

The level2 client and server runs as a daemon process to give them the privilege to run continuously in the background. As both the client and server in level2 does not need any user interference and need to run all the time to update the system clock of the running computer from the level1 server.

As it is mentioned above the client process for level2 run continuously and it updates its system clock every 1 minute (polls time from the level1 server every 60 seconds). Server daemon process is built in the same manner but unlike the client process it does not have a waiting time.

Marzullo’s algorithm and function marzullo(long [])

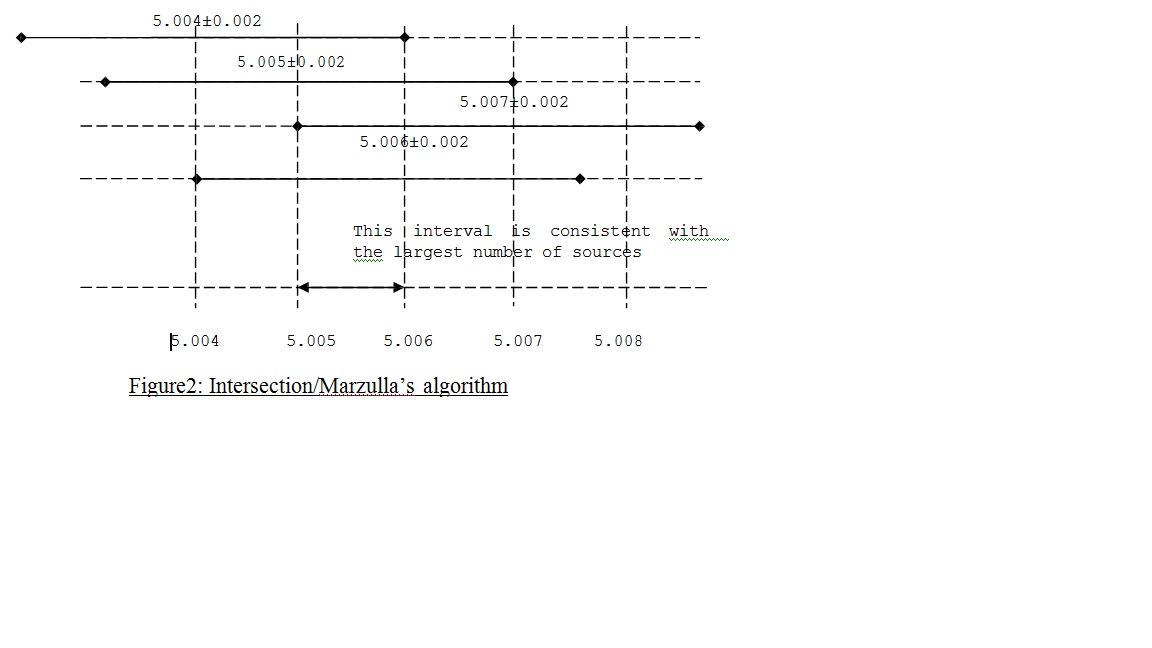

Marzullo’s algorithm, invented by Keith Marzullo, is an agreement algorithm used to select sources for estimating accurate time from a number of noisy time sources. A modified version of his algorithm, intersection algorithm, is use in NTP. We used the simplified version of this algorithm to choose the best possible time from our time sources (level1 servers).

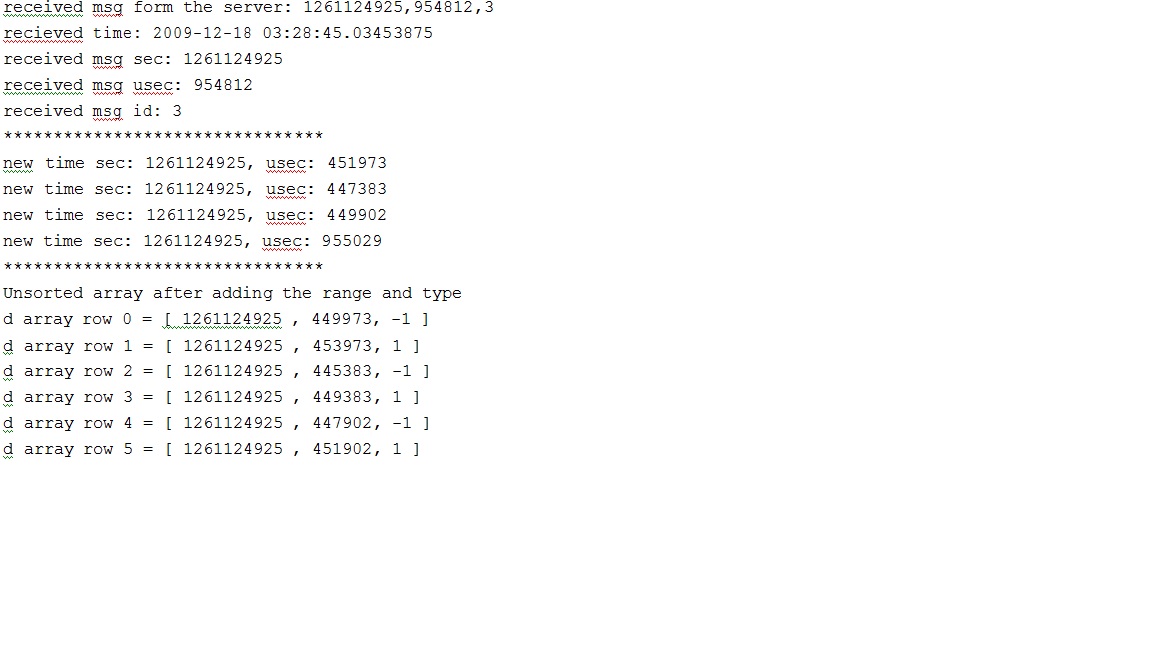

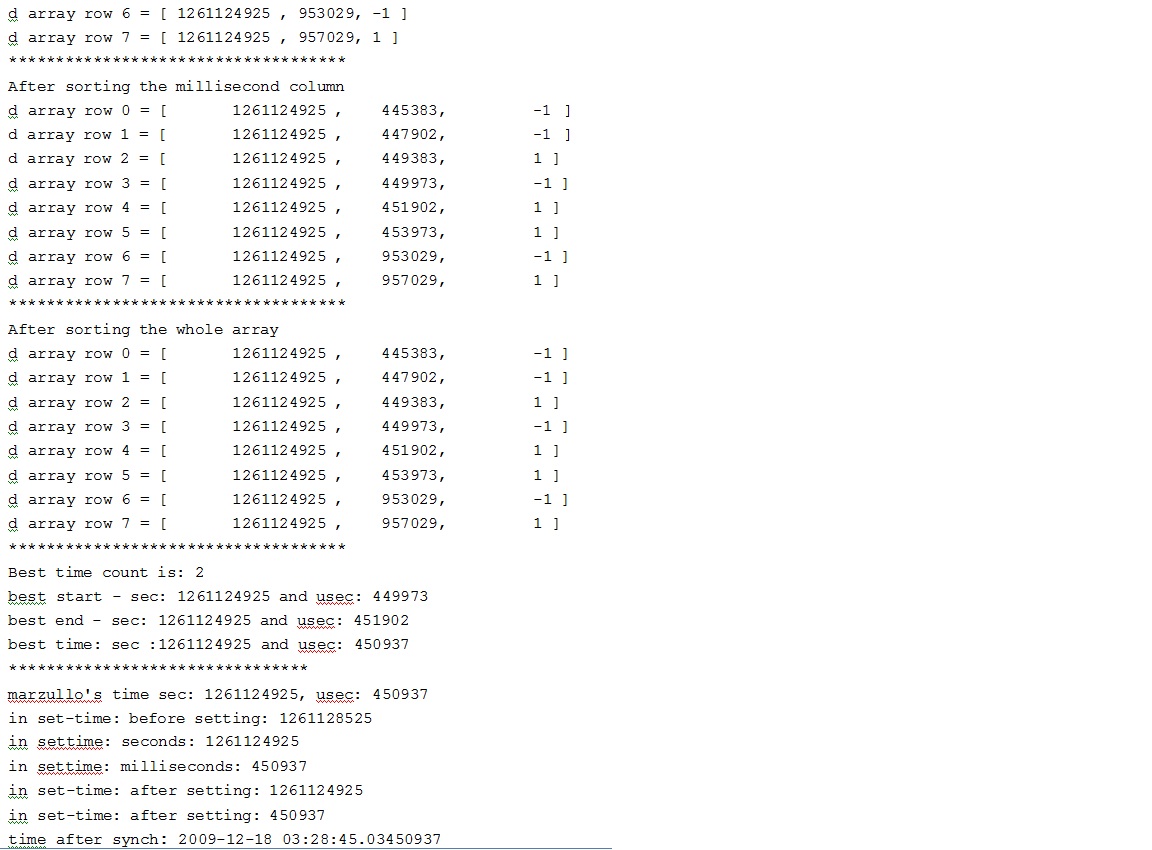

Our function long* marzullo (long []) takes an array of four times from four different level1 servers and return a pointer to an array with the best seconds and milliseconds. First it takes the given array of times and makes two entries for each time as ±2 milliseconds. For each entry it also adds a type of -1 for -2millisecons and +1 for +2 milliseconds. As a result for 4 time entry it produces an array of 8 time entries with type as ±1. For our LLTP we took ±2milliseconds as our error range. After adding the type the entry will look like <entry – 2, -1> for the beginning of the range and <entry+2, +1> for the end of the range [entry±2].

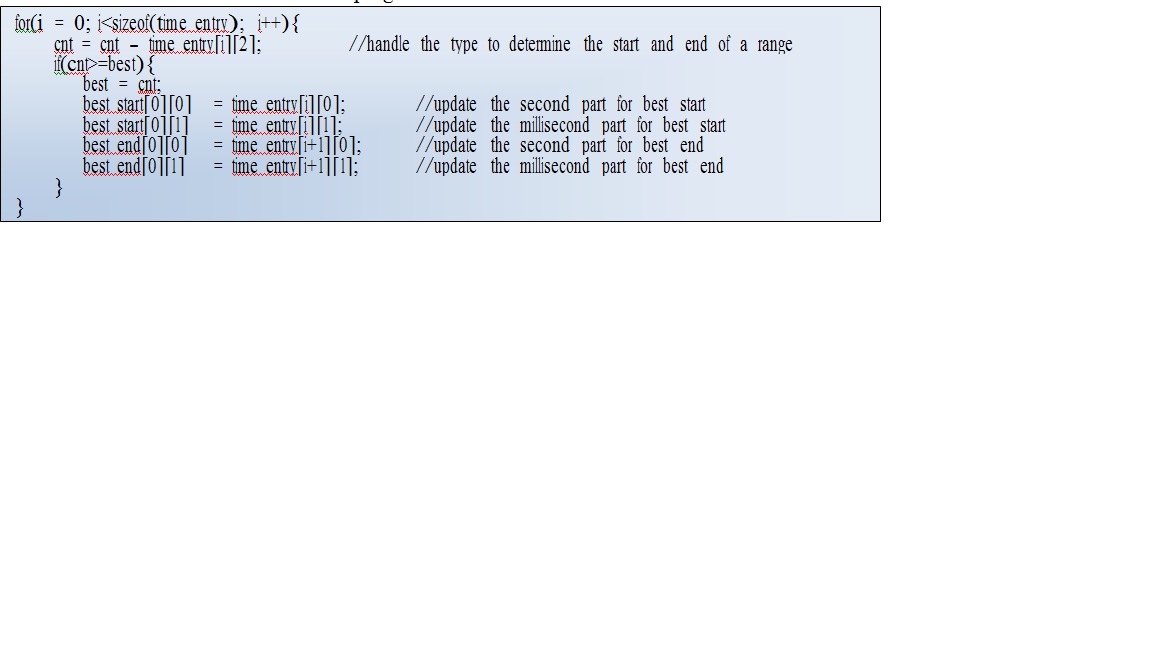

The description of the algorithm uses the following variables: best (largest number of overlapping intervals found), cnt (current number of overlapping intervals), best_start and

best_end (the beginning and end of best interval found so far). We used a two dimensional array to keep the second, millisecond and type of the range. The structure of the array is as follows:

time_entry [i][0] = second part of the time entry time_entry [i][1] = millisecond part of the time entry time_entry [i][2] = type of the range (i.e. ±1)

Here ‘Time_entry is the name of the array and ‘i’ is used as an index to traverse the array. After creating the array it follows the following step to determine the accurate time. Then it sends back the best chosen time to the level2 client program.

List of files

Level1 server:

DieWithError.c

get-time.c

L1Server.c

Level2 server:

daemon_server.c

DieWithError.c

get-time.c

L2Server.c

Level2 client:

daemon_client.c

DieWithError.c

get-time.c

L2Client.c

marzullo.c

print-time.c

selection_sort.c

set-time.c

Level3 client:

DieWithError.c

get-time.c

print-time.c

set-time.c

UDPTimeClient.c

Discussion/Output

The most intresting part of testing this was to see how distrubted architect of NTP client-server works and how could it be improved. I learnt alot by setting up and running them and seeing the results. It was fun improving little bit on exsisting implementation of NTP.

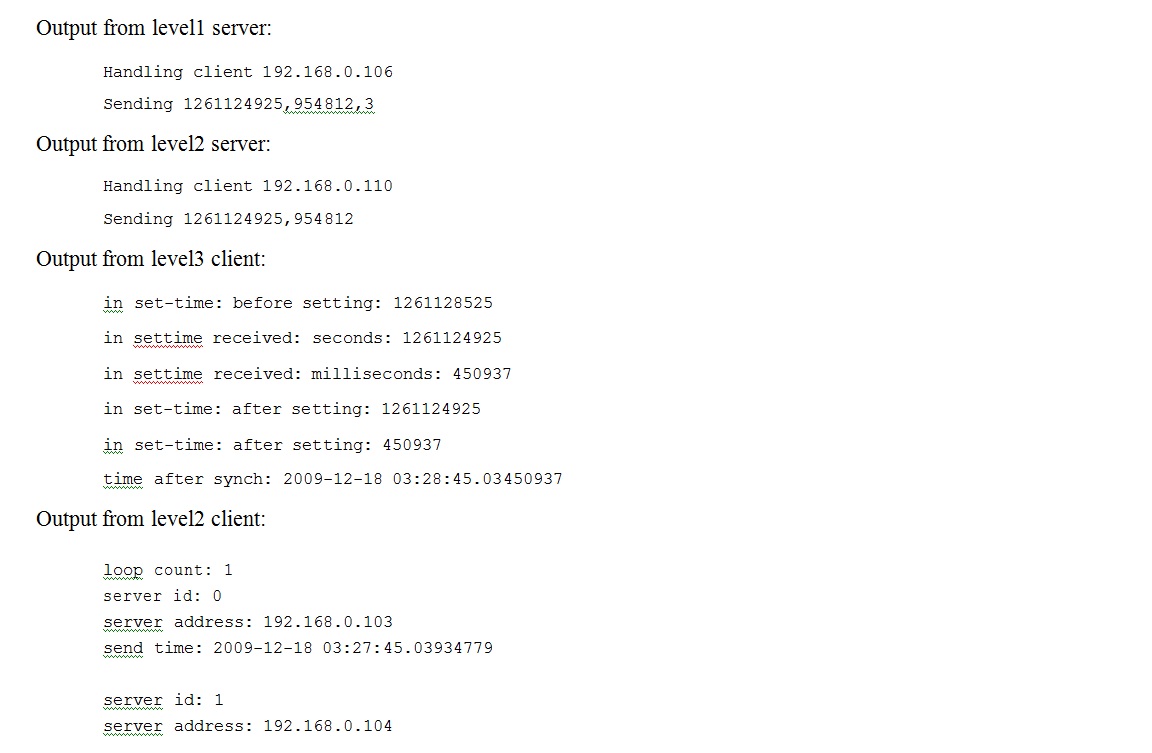

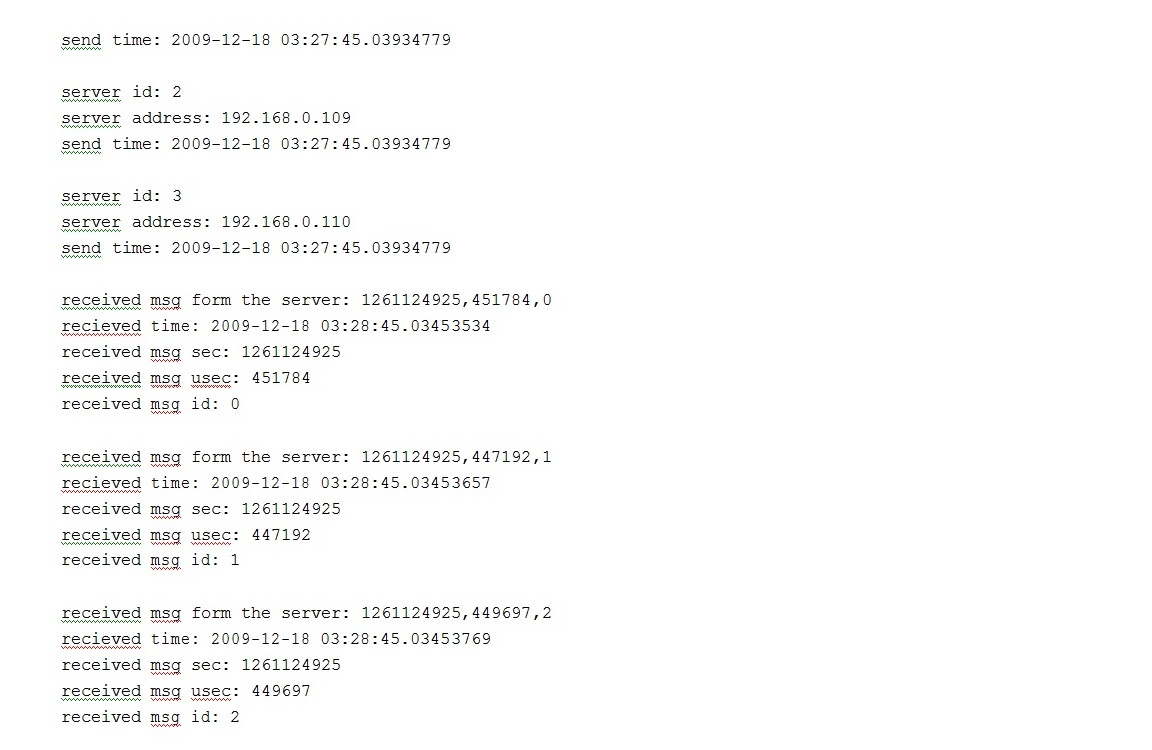

Output form different levels of clients and servers are given in this section. Output from level1 server:

Conclusion

Overall, there has been lots of work going on NTP , due to high demand of distributed clinet-server architect based application. This was carried on a very small linux based lab , in future I guess there will be need to test on large industry based scale , where many systems can be connected and than test to see how the delay from clients to server causes disturbance in algorithm. There will be issues for sure since as the information travels it takes time and that delayed time will need to be adjusted more and will be focus of future work. The most important feature of NTP relevant to this paper is its scalability: its phase lock loop clock discipline is designed for small scale networks so that NTP can maintain low offsets among clocks, even if propagation delays increase significantly.

They main problem of NTP is under heavy load, constantly changing offsets may cause frequent clock re-adjustments by NTP. Each time it re-adjust, packets transmitted at later time may have smaller time stamps than packets transmitted earlier resulting in interfere with path monitoring. Future plan is to Global Positioning System receivers for clock synchronization among edge routers.

Whether its traffic in air or ground or buying selling things carefully coordinated , reliable and accurate time is vital. In some cases ill-gotten time might cause DNS caches to to expire and entire internet to implode on the root servers , which was one of the threat on the eve of millennium in 1999. Critical data files might expire before they are created, and an electronic message might arrive before it was sent. Reliable and accurate computer time is necessary for any real-time distributed computer application, which is what much of our public infrastructure has become.

References

- Ntp home page: http://ntp.org Acessed on 13th Feb 2011

- Wikipedia for network time protocol: http://en.wikipedia.org/wiki/Network_Time_Protocol Accessed on 13th Feb 2011

- Wikipedia for Marzullo’s algorithm: http://en.wikipedia.org/wiki/Marzullo's_algorithm Accessed on 13th Feb 2011

- Linux Home Networking : "How To : NTP Server" : http://www.linuxhomenetworking.com/wiki/index.php/Quick_HOWTO_:_Ch24_:_The_NTP_Server Accessed on 15 Feb 2011