DistOS-2011W Failure Detection in Distributed Systems

Seyyed Sajjadpour

Abstract

Failure detection has long been studied in distributed systems. It is valuable because it improves a system's reliability and therefore its usefulness. Hence, it is important for distributed systems to detect failures efficiently and accurately, and to handle them when they occur [3].

Introduction

In this literature review, I first give a brief history of failure detectors in distributed systems, then define the best-known protocols used for monitoring between local processes, then describe their drawbacks, and finally survey work that addresses those drawbacks.

History

Some work done in the 1990s was related to solving the consensus problem. Consensus roughly means processors or units agreeing on a common decision despite failures [12].

Fischer et al. [7] prove that fault-tolerant cooperative computing (consensus) cannot be solved in a totally asynchronous model. Asynchronous distributed systems are ones in which message transmission delays are unbounded [12, 13]. They conclude that models are needed that take more realistic approaches, based on assumptions about processor and communication timings. However, Chandra and Toueg [12 from 4] mention that augmenting the asynchronous system model with failure detectors makes it possible to bypass the impossibility result in [7].

In [1], the author suggests that when an independent failure management system investigates a service that is not responding, it should contact the operating system running that service to obtain further confidence. The proposed independent failure detector should have the following three functional modules: “1. A library that implements simple failure management functionality and provide the API to the complete service. 2. A service implementing per node failure management, combining fault management with other local nodes to exploit locality of communication and failure patterns. 3. An inquiry service closely coupled with the operating system which, upon request, provides information about the state of local participating processes.” [1]

The work concludes by suggesting that failure detection should be a component of the operating system. Most later work tries to follow this suggestion.

All failure detection algorithms and schemes use time as a means to identify failure. The protocols differ in how the timeout is handled, in when, where, and how often messages are sent, and in whether communication is synchronous or asynchronous. For small distributed networks, such as LANs, coordination and failure detection are simple and do not require much complexity.

Protocols

The two best-known protocols are the push and pull strategies. In [3], the authors also introduce a combination of both push and pull.

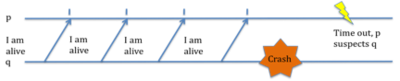

The Push Model

Assume that we have two processes, p and q, with p being the monitor. Process q sends a heartbeat message every t seconds, so process p expects an “I am alive” message from q every t seconds. Heartbeat messages are messages sent on a periodic basis to report that a process is alive. If, after a timeout period T, p has not received any message from q, it starts suspecting q [3,4]. The figure below is used in both [3] and [4], with a minute difference in each.

As you can see in the figure, process q sends heartbeat messages periodically. At one point it crashes and stops sending messages. Process p, waiting for heartbeat messages, no longer receives any, and after a timeout period of T it suspects that q has failed.

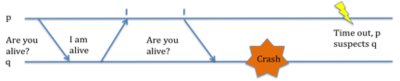
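The push model described in this section can be sketched as follows. This is a minimal illustration rather than the implementation from [3] or [4]: the class name `PushMonitor`, its method names, and the explicit `now` arguments (used instead of a real clock so the example is deterministic) are all assumptions. Treating a process that has never sent a heartbeat as suspected is also a choice made only for this sketch.

```python
class PushMonitor:
    """Monitor p in the push model: q sends heartbeats, p only listens."""

    def __init__(self, timeout):
        self.timeout = timeout       # T: silence tolerated before suspecting
        self.last_heartbeat = {}     # process id -> arrival time of last heartbeat

    def on_heartbeat(self, pid, now):
        """Record an 'I am alive' message received from pid at time now."""
        self.last_heartbeat[pid] = now

    def suspects(self, pid, now):
        """True if pid has been silent for longer than T (or was never heard)."""
        last = self.last_heartbeat.get(pid)
        return last is None or now - last > self.timeout


monitor = PushMonitor(timeout=3.0)
for t in (0.0, 1.0, 2.0):            # q sends a heartbeat every second, then crashes
    monitor.on_heartbeat("q", now=t)

suspected_at_4 = monitor.suspects("q", now=4.0)   # only 2 s of silence
suspected_at_6 = monitor.suspects("q", now=6.0)   # 4 s of silence, longer than T
```

Here p does not suspect q at time 4, but does at time 6, once the silence exceeds T.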

The Pull Model

Assume that we have the same parameters as above. In this model, instead of process q (the one that has to prove it is alive) sending messages to p every few seconds, p sends ‘are you alive?’ messages to q every t seconds and waits for q to respond ‘yes I am alive’ [3,4]. The figure below is used in both [3] and [4], with a minute difference in each.

In the above figure, p repeatedly checks whether q is alive, and as long as q is alive it responds with the 'I am alive' heartbeat message. Once q crashes, p no longer receives responses from q and starts suspecting that q has failed after a timeout period T.

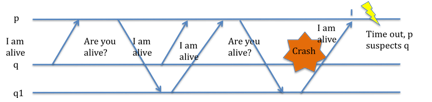
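The pull model can be sketched in the same style. Again this is only an illustration under stated assumptions: `PullMonitor` and its methods are hypothetical names, time is passed in explicitly, and the timeout T is measured from the oldest query that q has not yet answered.

```python
class PullMonitor:
    """Monitor p in the pull model: p asks, q is expected to answer."""

    def __init__(self, timeout):
        self.timeout = timeout   # T: how long p waits for an answer
        self.pending = {}        # process id -> send time of oldest unanswered query

    def send_query(self, pid, now):
        """p sends 'are you alive?'; keep the time of the oldest open query."""
        self.pending.setdefault(pid, now)

    def on_reply(self, pid):
        """q answered 'yes I am alive'; all open queries are satisfied."""
        self.pending.pop(pid, None)

    def suspects(self, pid, now):
        """True if a query to pid has gone unanswered for longer than T."""
        sent = self.pending.get(pid)
        return sent is not None and now - sent > self.timeout


monitor = PullMonitor(timeout=2.0)
for t in (0.0, 1.0):             # q is alive and answers each query
    monitor.send_query("q", now=t)
    monitor.on_reply("q")
monitor.send_query("q", now=2.0)  # q has crashed: no reply from here on
monitor.send_query("q", now=3.0)

suspected_at_3 = monitor.suspects("q", now=3.0)   # query open for 1 s
suspected_at_5 = monitor.suspects("q", now=5.0)   # query open for 3 s, longer than T
```

Note that every liveness check costs two messages here (a query and a reply), whereas the push model needs only one.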

The Dual Scheme

The pull model is somewhat inefficient, as potentially too many messages are sent between the processes: every check costs both a request and a reply. The push model is more efficient in this respect, since only one-way heartbeats are sent. Hence, in [3] the authors propose a model that mixes the two. During the first message-sending phase, every process q monitored by p is assumed to use the push model and hence to send “I am alive” or “liveness” messages to p. After some time, p assumes that any monitored process that did not send a liveness message is using the pull model. The figure below appears only in [3].
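One monitoring round of this dual scheme might look like the sketch below. It is an interpretation of the description above, not code from [3]: the function `dual_round`, its parameters, and the `answers_query` callback (which stands in for sending an "are you alive?" message and waiting for the timeout) are hypothetical.

```python
def dual_round(monitored, pushed, answers_query):
    """Run one round of the dual scheme and return the suspected process ids.

    monitored:      ids of all processes that p watches
    pushed:         ids whose liveness message arrived during the push phase
    answers_query:  callable(pid) -> bool, True if pid replies to a pull query
    """
    # Phase 1: anything that pushed a liveness message is considered alive.
    silent = set(monitored) - set(pushed)
    # Phase 2: silent processes are assumed to use the pull model and are queried.
    return {pid for pid in silent if not answers_query(pid)}


monitored = {"q1", "q2", "q3"}
suspected = dual_round(
    monitored,
    pushed={"q1"},                           # q1 uses the push model
    answers_query=lambda pid: pid == "q2",   # q2 uses pull and is alive; q3 crashed
)
```

Only the processes that stayed silent in the first phase cost an extra query and reply, which is where the scheme saves messages compared with pulling from everyone.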

More Complex Methods

Although the above-mentioned protocols can help us detect failures, they do not scale well in large distributed systems. This is mostly due to bandwidth limitations, message overload, and similar factors; hence scalability is the major concern [3]. There have been various approaches to solving this problem. In my review, I will touch upon the three that I investigated:

1) The Hierarchical Method

2) Gossip-style Failure Detection

3) The Accrual Failure Detector

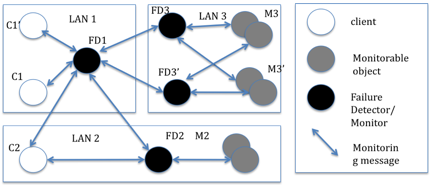

The Hierarchical Model

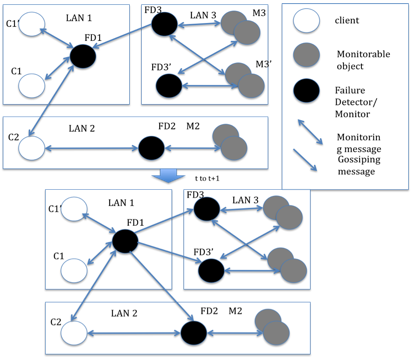

This model is appropriate for a LAN. In [3], the authors introduce three different elements in the hierarchical model (in summary):

1) Clients

2) Monitors

3) Monitorable objects

I will illustrate this with the figure below from [3]; note that I redrew the figure myself to avoid any copyright issues.

In the figure, FD1, FD2, FD3 and FD3’ are all monitors/failure detectors. As you can see, the monitors are not all in the same LAN; however, each monitor only monitors monitorable objects in its own LAN. While monitoring the monitorables, the monitors also notify the clients of the status of the objects the clients need to know about. This model greatly reduces the number of messages exchanged between processes. Instead of every client monitoring every monitorable object, the monitors do so and notify the clients when necessary or when asked. On top of that, monitors ask other monitors about their monitorable objects, so they do not need to communicate with every remote monitorable object themselves. Again, this reduces the number of heartbeat messages exchanged. In the given example, even if FD3 or FD3’ fails, the clients will not notice, as messages can be routed through another path.
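The division of labour between clients, monitors, and monitorable objects can be sketched as below. This is a simplified illustration, not the object model of [3]: the `Monitor` class, its methods, and the object names "web" and "db" are hypothetical, and the heartbeat exchange inside each LAN is reduced to a `report` call.

```python
class Monitor:
    """A failure detector that watches objects in its own LAN only."""

    def __init__(self, name):
        self.name = name
        self.local_status = {}   # object id -> True (alive) / False (suspected)
        self.peers = []          # other monitors this monitor can ask

    def report(self, obj, alive):
        """Result of local monitoring (e.g. push or pull) for one object."""
        self.local_status[obj] = alive

    def is_alive(self, obj, _visited=None):
        """Answer a client from local knowledge, else ask peer monitors."""
        visited = _visited if _visited is not None else set()
        visited.add(self.name)
        if obj in self.local_status:
            return self.local_status[obj]
        for peer in self.peers:
            if peer.name not in visited:      # avoid asking in circles
                answer = peer.is_alive(obj, visited)
                if answer is not None:
                    return answer
        return None                           # no reachable monitor knows obj


fd1, fd2 = Monitor("FD1"), Monitor("FD2")
fd1.peers, fd2.peers = [fd2], [fd1]
fd1.report("web", alive=False)    # object in FD1's LAN, currently suspected
fd2.report("db", alive=True)      # object in FD2's LAN

# A client attached to FD1 never contacts "db" or FD2's LAN directly.
db_status = fd1.is_alive("db")
web_status = fd1.is_alive("web")
unknown_status = fd1.is_alive("cache")
```

The client exchanges messages with one monitor only, and only monitors talk across LAN boundaries, which is the source of the message savings described above.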

Gossip-Style Failure Detection

The hierarchical configuration seems to be a good choice for a few LANs working together; however, it still does not solve our problem for larger-scale networks, such as WANs or distributed work over the Internet.

Gossiping in distributed systems is the repeated probabilistic exchange of information between two members [6]. Gossiping in distributed systems first appeared in [8, from 6].

Gossiping dynamically exchanges information among peers. Each peer has a cache/list of other peers. Traditionally, gossiping in distributed systems has been used to disseminate information to other peers [6]. Most gossiping approaches have the following three tasks:

1) Peer Selection: In this task, each peer must choose some peers to send data to. This could be on a schedule, or it could be done randomly.

2) Data Exchanged: The peer sending the data must select some data to send to the peers it has chosen.

3) Data Processing: The peer at the receiving end decides what to do with the data sent from other peers [6].

In our case, we are interested in the use of gossiping for failure detection. In [2], they propose a Gossip-Style failure detection mechanism that we will look at here.

In gossiping, a member sends information to randomly chosen members [2]. Their protocol, like gossip protocols in general, combines the efficiency of hierarchical dissemination with the robustness of flooding protocols [2]. In effect, their protocol gossips to figure out who else is still gossiping.

Each member maintains a list of every known member, with its address and a heartbeat counter that serves as the basis for judging failure. Each entry in the list maps a member to its heartbeat counter. Every Tg seconds, each member increments its own heartbeat counter and selects members to send its list to. A receiving member merges the arriving list with its own, adopting the maximum heartbeat counter for each member. Each member also keeps track of the last time each member's heartbeat counter increased. If the heartbeat counter of a member has not increased in Tf (T fail) seconds, then that member is suspected of failure. However, an update may take some time to reach a member, and one member may have passed Tf for a given member while others have not yet done so. They therefore introduce another time variable, Tc, such that once this time has also passed, members can have greater confidence in the failure of a suspected node.

Each member gossips at regular intervals, but these intervals are not synchronized with each other.

The above is the core of the algorithm. In brief, each member keeps a list of the other members with a heartbeat counter for each. Once every few seconds, it increments its own heartbeat counter and randomly chooses other members to gossip its new list to. At the receiving end, the member merges the incoming list with its own. If the heartbeat counter of a given member is not incremented within an adequate time, that member is suspected of failure.
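The core steps summarized above can be sketched as follows. This is a minimal illustration and not the implementation from [2]: the class `GossipMember` and its method names are assumptions, addresses are omitted, only the Tf timeout is modelled (not Tc), and time is passed in explicitly so that the example is deterministic.

```python
import random


class GossipMember:
    """One member of a gossip-style failure detection group."""

    def __init__(self, name, t_fail):
        self.name = name
        self.t_fail = t_fail                  # Tf: silence before suspecting
        # member -> [heartbeat counter, local time the counter last increased]
        self.table = {name: [0, 0.0]}

    def tick(self, now):
        """Every Tg seconds: bump own heartbeat and return the list to gossip."""
        self.table[self.name][0] += 1
        self.table[self.name][1] = now
        return {member: hb for member, (hb, _) in self.table.items()}

    def pick_peer(self, rng):
        """Choose a random known member (other than self) to gossip to."""
        others = [m for m in self.table if m != self.name]
        return rng.choice(others) if others else None

    def merge(self, incoming, now):
        """Adopt the maximum heartbeat per member and note when it increased."""
        for member, hb in incoming.items():
            if member not in self.table or hb > self.table[member][0]:
                self.table[member] = [hb, now]

    def suspected(self, now):
        """Members whose heartbeat has not increased within Tf."""
        return {m for m, (_, seen) in self.table.items()
                if m != self.name and now - seen > self.t_fail}


a = GossipMember("a", t_fail=3.0)
b = GossipMember("b", t_fail=3.0)
for now in (1.0, 2.0):                 # two gossip rounds while both are alive
    a.merge(b.tick(now), now)
    b.merge(a.tick(now), now)

peer = a.pick_peer(random.Random(1))   # only one other member is known
# b crashes after time 2.0 and stops gossiping.
suspected_early = a.suspected(now=4.0)
suspected_late = a.suspected(now=6.0)
```

Because a last saw b's heartbeat counter increase at time 2.0, it does not suspect b at time 4.0 but does at time 6.0, once more than Tf seconds have passed.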

The paper then goes on to analyze the proposed scheme, calculating detection time in different scenarios and varying the parameters. They study detection time against the number of failed members, the number of mistakes, and the probability of message loss. They also investigate which values should be chosen for Tc and Tf with respect to the number of members, the expected failure rate, the expected message loss, and so on.

Expanding the Gossip (Multi-level Gossiping)

Their scheme so far works well within a single subnet. They extend it to a multi-level gossiping scheme. To avoid using too much bandwidth, most gossiping is done within the same subnet, with few gossip messages sent between subnets and even fewer between domains. The protocol aims to have, on average, one member per subnet gossip to a member in another subnet in each round. To achieve this, every member tosses a weighted coin every time it gossips. One out of n times, where n is the size of the subnet, it picks a random subnet within its domain and a random host in that subnet to gossip to. The member then tosses another coin to decide whether to gossip to another domain.
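The weighted coin can be illustrated with a short sketch. If each of the n members of a subnet leaves the subnet with probability 1/n, the expected number of cross-subnet gossips per round is n × 1/n = 1, which is the stated goal. The function `choose_scope` is hypothetical, and two details are assumptions of this sketch rather than facts from [2]: the second coin is tossed only when the first one says to leave the subnet, and its weight `cross_domain_prob` is an arbitrary parameter.

```python
import random


def choose_scope(subnet_size, cross_domain_prob, rng):
    """Decide where one gossip message goes."""
    # First weighted coin: leave the subnet one out of n times.
    if rng.random() >= 1.0 / subnet_size:
        return "same-subnet"
    # Second coin (weight is an assumption): occasionally leave the domain too.
    if rng.random() < cross_domain_prob:
        return "other-domain"
    return "other-subnet"


rng = random.Random(0)       # seeded only to make the run repeatable
n = 10                       # subnet size
rounds = 10_000
counts = {"same-subnet": 0, "other-subnet": 0, "other-domain": 0}
for _ in range(rounds):
    counts[choose_scope(n, cross_domain_prob=0.1, rng=rng)] += 1

# Fraction of gossips that left the subnet; should be close to 1/n.
leaving_fraction = (counts["other-subnet"] + counts["other-domain"]) / rounds
```

With these settings the vast majority of messages stay inside the subnet, a small share crosses subnets, and an even smaller share crosses domains.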

In [3], the authors draw a diagram that combines the hierarchical model with the gossip model of [2]. The figure is as follows:

There has been other work on gossip-style failure detection [15].

The Accrual Failure Detector

N. Hayashibara et al. [5] present a new approach to failure detection. They argue that it is difficult to satisfy several application requirements simultaneously with classical failure detectors. They say that a failure detection service must maintain a certain level of quality of service while still allowing applications to tune it to meet their own needs. They introduce accrual failure detectors, which are not based on a Boolean output in which a process is either a) correct/working or b) suspected.

In the accrual model, a monitor outputs a value on a continuous scale rather than a Boolean. In this protocol, it is the level of confidence in a suspicion that changes. A process’s level of suspicion increases over time when its heartbeats do not arrive on time. The protocol samples heartbeat arrivals from each host, analyzes them, and uses that information to predict the pattern the next heartbeats will follow.

They use a function susp_level_p(t) >= 0, the suspicion level of a process p at time t. Its value grows while heartbeats from p fail to arrive, decreases when p is back up, and should not change much while p is working.
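One possible way to compute such a suspicion level is sketched below. It assumes that heartbeat inter-arrival times are roughly normally distributed and reports the negative base-10 logarithm of the probability that a heartbeat would arrive later than the silence observed so far. That modelling choice, the class `AccrualDetector`, its method names, and the small floor on the standard deviation are assumptions of this sketch and are not taken from the text above.

```python
import math
import statistics


class AccrualDetector:
    """Outputs a continuous suspicion level instead of a Boolean."""

    def __init__(self):
        self.intervals = []        # sampled heartbeat inter-arrival times
        self.last_arrival = None

    def heartbeat(self, now):
        """Record a heartbeat arrival and sample the inter-arrival time."""
        if self.last_arrival is not None:
            self.intervals.append(now - self.last_arrival)
        self.last_arrival = now

    def susp_level(self, now):
        """Suspicion level >= 0; grows the longer the next heartbeat is overdue."""
        if len(self.intervals) < 2:
            return 0.0             # not enough samples to estimate a pattern
        mean = statistics.mean(self.intervals)
        std = max(statistics.stdev(self.intervals), 1e-3)  # floor avoids /0
        elapsed = now - self.last_arrival
        # Probability that a heartbeat arrives later than `elapsed` (normal tail).
        p_later = 0.5 * math.erfc((elapsed - mean) / (std * math.sqrt(2)))
        return -math.log10(max(p_later, 1e-300))           # clamp avoids log(0)


detector = AccrualDetector()
for t in (0.0, 1.0, 2.1, 3.0, 4.05):        # heartbeats roughly every second
    detector.heartbeat(t)

levels = [detector.susp_level(t) for t in (4.5, 5.5, 7.0)]   # silence grows
detector.heartbeat(7.2)                      # the process is back up
after_recovery = detector.susp_level(7.3)
```

An application can then compare the level against its own threshold, choosing a low threshold for fast but less certain detection or a high one for slower but more confident detection.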

There has been further work in this area, such as [10].

Further Work

There has also been other work in this field that I did not get a chance to analyze deeply. Such work includes the quality of service of failure detectors [9].

There has also been work on dynamic distributed systems. N. Sridhar [11] presents local failure detectors that can tolerate mobility and topology changes.

There is also work on distributed wireless networks [14].

Conclusion

In this literature review, I discussed some past work, introduced the basic protocols that processes use to detect failures, and then extended the discussion to wider-area networks and the more sophisticated protocols and methods that I learned about while reading different papers.

References

[1] W. Vogels. World wide failures. In Proceedings of the ACM SIGOPS 1995 European Workshop, 1995.

[2] R. Van Renesse, Y. Minsky, M. Hayden. A gossip-style failure detection service. In Proceedings of the IFIP International Conference on Distributed Systems Platforms and Open Distributed Processing, 1998. The version used here is from 2007.

[3] P. Felber, X. Defago, R. Guerraoui, and P. Oser. Failure detectors as first class objects. In Proceedings of the International Symposium on Distributed Objects and Applications, 1999. The version I used here is from 2002.

[4] N. Hayashibara, A. Cherif, T. Katayama, Failure Detectors for Large-Scale Distributed Systems, In 21st IEEE Symposium of Reliable Distributed Systems (SRDS’ 02). 2002

[5] N. Hayashibara, X. Defago, R. Yared, T. Katayama. The φ accrual failure detector. In Proceedings of the 23rd IEEE International Symposium on Reliable Distributed Systems (SRDS’ 04), pages 55-75, 2004

[6] A. Kermarrec, M. van Steen, Gossiping in Distributed Systems, ACM SIGOPS Operating Systems Review. 2007

[7] M. J. Fischer, N. Lynch and M. Paterson, Impossibility of Distributed Consensus with One Faulty Process, Journal of the ACM, 1985

[8] A. Demers, D. Greene, C. Hauser, W. Irish, J. Larson, S. Shenker, H. Sturgis, D. Swinehart, and D. Terry. “Epidemic Algorithms for Replicated Database Maintenance.” In Proc. Sixth Symp. on Principles of Distributed Computing, pp. 1-12, Aug. 1987. ACM.

[9] W. Chen, S. Toueg, M.K. Aguilera, On the Quality of Service of Failure Detectors, IEEE transactions on Computers, 2002

[10] N. Hayashibara, M. Takizawa, Design of a Notification System for the Accrual Failure Detector, 20th International Conference on Advanced Information Networking and Applications – volume 1 (AINA’ 06). 2006

[11] N. Sridhar, Decentralized Local Failure Detection in Dynamic Distributed Systems, In Proceedings of the 25th IEEE Symposium on Reliable Distributed Systems. 2006

[12] T. Chandra, S. Toueg. Unreliable failure detectors for reliable distributed systems. Journal of the ACM. 1996

[13] T. Chandra, V. Hadzilacos, S. Toueg, The Weakest Failure Detector for Solving Consensus, Journal of the ACM (JACM), 1996

[14] J. Chen, S. Kher, A. Somani, Distributed fault detection of wireless sensor networks, In proceedings of the 2006 workshop on dependability issues in wireless ad hoc networks and sensor networks DIWANS ’06, 2006

[15] S. Ranganathan, A. George, R. Todd, M. Chidester, Gossip-Style Failure Detection and Distributed Consensus for Scalable Heterogeneous Clusters, Cluster Computing, 2001