DistOS-2011W FWR

Introduction

The idea behind FWR (First Webocratic Republic) is simple: to create a self-governed community on the web.

The first approach was natural: write a CMS where users can register (become citizens of FWR), communicate freely, elect leaders and be elected. The main goal of such a community is to survive, so some source of income is needed to pay the hosting provider. The CMS needed two parts: a public one and a citizens-only one. Citizens elect leaders, who decide on the content-generation strategy; then everybody works on the public part (the tourist site) to attract visitors and earn money from ads, referral links, etc. This approach can't be described as fully democratic, since whoever owns the root password on the server still holds complete, god-like power over the FWR.

To fix this issue, another idea was added: distribute a copy of the world (filesystem and DB) to all citizens in some p2p fashion (probably torrent) every time the government changes, so that if the new government screws things up, everyone has a "backup world". This also contributes to the overall distribution of FWR: every copy is fully functional and can be set up as a separate "country".

But all of this still wasn't distributed enough.

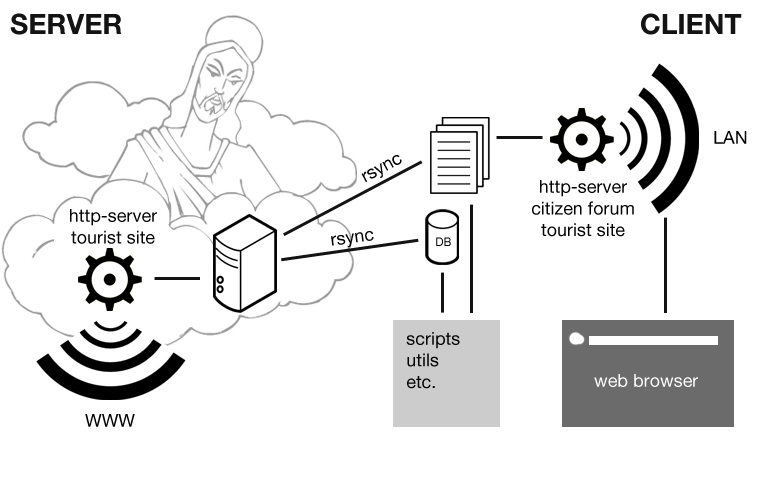

Here is the structure in mind prior to implementation:

The CMS runs on the main server, but in read-only mode. The data (files, databases, etc.) is synced by rsync from client machines. Clients are citizens, and only they can write to the synced directories. A local HTTP server on each client machine runs a fully functional CMS which can be accessed locally. There is also a set of scripts to work with files, databases and rsync. Clients can sync with each other too.

So, even though we still have a main server for public access, the system does not depend on it. The main server can easily be replaced. The system keeps running while offline and tries to sync everything as soon as it gets back online.
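The "sync as soon as it gets back online" behaviour could be approximated with a small retry loop. This is only a sketch: the function name sync_when_online, the retry count and the delay are assumptions and not part of the original setup, and it presumes that the sync command returns a non-zero exit status while the other side is unreachable.

```shell
#!/bin/bash
# Hypothetical helper: retry a sync command until it succeeds.
# Usage: sync_when_online MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
sync_when_online() {
    local max_tries=$1 delay=$2
    shift 2
    local attempt=1
    while [ "$attempt" -le "$max_tries" ]; do
        # The command is expected to fail while the peer is unreachable.
        if "$@"; then
            return 0
        fi
        attempt=$((attempt + 1))
        # Pause before the next attempt, but not after the last one.
        if [ "$attempt" -le "$max_tries" ]; then
            sleep "$delay"
        fi
    done
    return 1
}

# Example (assumed path): sync_when_online 10 60 /home/username/fwr.sh sync
```

A cron job or a network-up hook could call such a helper, so that a client that was offline catches up without manual intervention.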

The amount of governance needed has also changed: in the previous model elected leaders had access to server files and databases, but now everyone has access to them. The only essential thing left is to moderate the content being synced. This can be done by adding personal or global filters that prevent particular people from syncing with the main server or with clients. Also, local and server storage can be put under a version control system, so that vandalism can be dealt with as in wikis. Moreover, every citizen can separate at any moment and run their own world.

This scheme can serve as the basis of a collaboration system or simply as a safe web-development environment.
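A personal or global filter of this kind could be as simple as a blocklist applied to the list of peers before syncing. The sketch below assumes that both files hold one user@host entry per line; the function name allowed_clients and the idea of a separate blocklist file are illustrative assumptions, not part of the original setup.

```shell
#!/bin/bash
# Hypothetical helper: print the entries of CLIENT_LIST that are not in BLOCKLIST.
# Both files are assumed to hold one user@host entry per line.
# Usage: allowed_clients CLIENT_LIST BLOCKLIST
allowed_clients() {
    local client_list=$1 blocklist=$2
    if [ -s "$blocklist" ]; then
        # -F fixed strings, -x whole-line match, -v invert, -f patterns from file
        grep -v -x -F -f "$blocklist" "$client_list"
    else
        # No blocklist (or an empty one): everybody is allowed.
        cat "$client_list"
    fi
}
```

The output can be fed to whatever loop performs the actual synchronization, so a blocked peer is simply never contacted.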

Setting up

Using rsync both ways can lead to inconsistencies and errors, which is why another tool was chosen: unison. It syncs files between two machines with a single command issued on either machine. Unison can use sockets or ssh to transfer data and can be used together with SVN or any other version control system.
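As an illustration of the single-command sync, the two directories can be described once in a unison profile. The sketch below writes such a profile; the helper name write_fwr_profile and the profile name fwr are assumptions, while root, auto, batch and silent are standard unison preferences. The paths and host in the example are placeholders.

```shell
#!/bin/bash
# Hypothetical helper: write a unison profile describing the two roots to keep in sync.
# Usage: write_fwr_profile PROFILE_DIR LOCAL_ROOT REMOTE_ROOT
write_fwr_profile() {
    local profile_dir=$1 local_root=$2 remote_root=$3
    mkdir -p "$profile_dir"
    cat > "$profile_dir/fwr.prf" <<EOF
# Roots: the local copy and the remote copy reached over ssh
root = $local_root
root = $remote_root
# Non-interactive operation
auto = true
batch = true
silent = true
EOF
}

# Example (placeholder host):
#   write_fwr_profile "$HOME/.unison" /home/username/fwr ssh://username@centralserver.com/fwr
# Afterwards the sync can be started with: unison fwr
```

Keeping the options in a profile means every script and every citizen invokes the same settings instead of repeating flags on the command line.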

Central server

The following steps are required to set up a central server:

1. Install unison (Debian example):

apt-get install unison

2. Install OpenSSH (Debian example):

apt-get install openssh-server openssh-client

Since we use ssh to transfer data, OpenSSH should be installed on both server and client. Alternatively, sockets can be used. The sshd daemon must be running at this point. If we want the server to initiate synchronization, we need to generate keys and give the public key to everyone who wants to join the community:

ssh-keygen -t dsa

File .ssh/id_dsa.pub is created. It is the public key.

3. Add user

adduser --home /home/username username

This user account is for the FWR server only; the servers will run under it. Then create the directory /home/username/fwr. This is where all synced data will be stored.

4. Install Apache (Debian example):

apt-get install apache2

5. Install inotify utility:

An inotify-based utility will monitor our 'fwr' directory on the server side and sync with all the clients on every modification. We could use incron, but it cannot monitor directories recursively. There is a tiny Python utility called Watcher which uses the Linux kernel's inotify via the pyinotify Python module. Watcher supports everything incron does and adds recursive monitoring.

Install Python, pyinotify, python-argparse

sudo apt-get install python python-pyinotify python-argparse

Download and unpack Watcher

wget https://github.com/splitbrain/Watcher/tarball/master --no-check-certificate && tar zxvf master

Client

The client machine runs a local FWR server and syncs data with the central server. The following steps are required to set up a client machine:

1. Install unison (Debian example):

apt-get install unison

2. Install OpenSSH (optional)

apt-get install openssh-client

Since we use ssh to transfer data, OpenSSH should be installed on both server and client. Alternatively, sockets can be used.

3. Create a key pair for passwordless connections:

ssh-keygen -t dsa

4. Copy the public key to the central server:

ssh-copy-id -i .ssh/id_dsa.pub username@remote.machine.com

This avoids entering a password when connecting to the central server, so that synchronization can be done seamlessly. Of course, it is safer to give the key to the central server administrator (an elected official), who will then upload it without sharing the password of the 'username' account on the central server.
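On the administrator's side, uploading a citizen's key amounts to appending it to the account's authorized_keys file. The following is a minimal sketch of that step; the function name add_citizen_key is an assumption, and the file locations are passed in rather than hard-coded.

```shell
#!/bin/bash
# Hypothetical helper for the administrator: append a citizen's public key to
# an authorized_keys file unless the same line is already present.
# Usage: add_citizen_key PUBKEY_FILE AUTHORIZED_KEYS_FILE
add_citizen_key() {
    local pubkey_file=$1 auth_file=$2
    [ -f "$pubkey_file" ] || return 1
    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"
    # With its default StrictModes setting, sshd rejects key files
    # that are writable by other users.
    chmod 600 "$auth_file"
    # Append only if the exact key line is not there yet.
    if ! grep -q -x -F -f "$pubkey_file" "$auth_file"; then
        cat "$pubkey_file" >> "$auth_file"
    fi
}

# Example (assumed paths): add_citizen_key citizen_id.pub /home/username/.ssh/authorized_keys
```

Because the helper is idempotent, re-submitting the same key does not create duplicate entries.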

5. Install Apache (Debian example):

apt-get install apache2

6. Install watcher (or another inotify-based utility) (optional):

Install Python, pyinotify, python-argparse

sudo apt-get install python python-pyinotify python-argparse

Download and unpack Watcher

wget https://github.com/splitbrain/Watcher/tarball/master && tar zxvf master

Configuration

Server

The following daemons should be running at all times on central server:

- sshd (OpenSSH daemon allowing remote connections from clients)

- httpd (apache web-server)

- watcher.py (monitoring system, syncs data on every modification)

The following files should be present in /home/username on central server:

- fwr/www (citizen-site, not public)

- fwr/www_tourist (tourist-site, public)

- fwr_clients (list of clients' usernames and IP addresses)

- .ssh/authorized_keys (clients' keys)

- .ssh/known_hosts (clients' hosts)

Apache

Add the following to httpd.conf:

Alias /fwr /home/username/fwr/www
<Directory /home/username/fwr/www>
Options FollowSymLinks
AllowOverride Limit Options FileInfo
DirectoryIndex index.php
</Directory>

Alias /fwr_tour /home/username/fwr/www_tourist
<Directory /home/username/fwr/www_tourist>
Options FollowSymLinks
AllowOverride Limit Options FileInfo
DirectoryIndex index.php
</Directory>

This can vary depending on the CMS you want to use. Restart Apache.

/etc/init.d/apache2 restart

Now the contents of /home/username/fwr/www (the main citizen site) are available at http://website.com/fwr, and the contents of /home/username/fwr/www_tourist (the public tourist site) are available at http://website.com/fwr_tour, where 'website.com' is the server's public domain name. The citizen site must be protected on the server side, so appropriate settings must be added to /home/username/fwr/www/.htaccess, and the .htaccess file should be excluded from synchronization.

A much safer approach is to avoid putting the citizen site on the public server altogether (as described in the general scheme at the beginning).
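One way to keep .htaccess out of synchronization is unison's -ignore option with a Name pattern. The wrapper below is a sketch only: the function name sync_without_htaccess and the UNISON_BIN override are assumptions added for illustration, and the remaining flags are the ones used by fwr.sh.

```shell
#!/bin/bash
# Hypothetical wrapper: sync two roots while leaving every .htaccess file alone.
# UNISON_BIN can be overridden (for example with "echo") to preview the command.
# Usage: sync_without_htaccess LOCAL_ROOT REMOTE_ROOT
sync_without_htaccess() {
    local local_root=$1 remote_root=$2
    "${UNISON_BIN:-unison}" "$local_root" "$remote_root" \
        -ignore 'Name .htaccess' -auto -silent -batch
}

# Example (placeholder host):
#   sync_without_htaccess /home/username/fwr ssh://username@centralserver.com/fwr
```

With the pattern in place, the server keeps its own access restrictions while clients remain free to run the citizen site locally without them.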

inotify + pyinotify

Make the following changes to watcher.ini:

watch=/home/username/fwr
events=create,delete,attribute_change,write_close,modify
command=/home/username/fwr.sh csync

Then run Watcher as a daemon:

python watcher.py start -c watcher.ini

Now the fwr.sh bash script is executed with the parameter 'csync' (clients sync) every time a modification occurs in the 'fwr' directory.
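Since a single change can raise several inotify events, and files written by a sync are themselves modifications of the watched directory, several copies of the script may be started at once. One possible guard, shown here as a sketch under the assumption that skipping an event while a sync is in progress is acceptable, is a lock directory; the function name run_exclusive and the lock path are hypothetical.

```shell
#!/bin/bash
# Hypothetical wrapper: run a command only if no other instance holds the lock.
# mkdir is atomic, so only one caller can create the lock directory.
# Usage: run_exclusive LOCK_DIR COMMAND [ARGS...]
run_exclusive() {
    local lock_dir=$1
    shift
    if ! mkdir "$lock_dir" 2>/dev/null; then
        return 0   # another sync is already running; skip this event
    fi
    local status=0
    "$@" || status=$?
    rmdir "$lock_dir"
    return "$status"
}

# Example (assumed paths): run_exclusive /tmp/fwr.lock /home/username/fwr.sh csync
```

The trade-off is that a change arriving during a running sync is only propagated by the next event, so this is a mitigation rather than a guarantee of consistency.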

Client

The following files should be present in /home/username on client:

- fwr/www (citizen-site, not public)

- fwr/www_tourist (tourist-site, public)

- .ssh/authorized_keys (clients' keys)

- .ssh/known_hosts (clients' hosts)

Apache

Add the following to httpd.conf:

Alias /fwr /home/username/fwr/www
<Directory /home/username/fwr/www>
Options FollowSymLinks
AllowOverride Limit Options FileInfo
DirectoryIndex index.php
</Directory>

Alias /fwr_tour /home/username/fwr/www_tourist
<Directory /home/username/fwr/www_tourist>
Options FollowSymLinks
AllowOverride Limit Options FileInfo
DirectoryIndex index.php
</Directory>

This can vary depending on the CMS you want to use. Restart Apache.

/etc/init.d/apache2 restart

Now the contents of /home/username/fwr/www (the main citizen site) are available at http://website.com/fwr, and the contents of /home/username/fwr/www_tourist (the public tourist site) are available at http://website.com/fwr_tour, where 'website.com' is the host name of the client machine.

inotify + pyinotify (optional)

Make the following changes to watcher.ini:

watch=/home/username/fwr
events=create,delete,attribute_change,write_close,modify
command=/home/username/fwr.sh sync

Then run Watcher as a daemon:

python watcher.py start -c watcher.ini

fwr.sh

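This simple script handles synchronization with the server or with clients.

#!/bin/bash
# fwr.sh: synchronize the local FWR tree with the central server (sync)
# or with every peer listed in the client list (csync).

FWR_PATH='/home/username/fwr'
CLIENT_LIST='/home/username/fwr_clients'
FWR_SERVER_PATH='username@centralserver.com'

# Exactly one argument is required
if [ $# -ne 1 ]; then
    echo "Usage: $0 [csync | sync]"
    exit 1
fi

if [ "$1" == 'csync' ]; then
    if [ ! -f "$CLIENT_LIST" ]; then
        echo "File not found!"
        exit 1
    fi
    # Each line is expected to be a user@host entry.
    # stdin is redirected so that nothing inside the loop can read from the client list.
    while read -r line; do
        unison "$FWR_PATH" "ssh://$line/fwr" -auto -silent -batch < /dev/null
    done < "$CLIENT_LIST"
fi

if [ "$1" == 'sync' ]; then
    # The remote root is the 'fwr' directory in the server account's home
    unison "$FWR_PATH" "ssh://$FWR_SERVER_PATH/fwr" -auto -silent -batch
fi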

What now?

So now we have a sort of pseudo-distributed web server. Does it work? The system was tested on a local Wi-Fi network with the following setup:

- central server: Debian Squeeze + Apache + Pluck CMS

- 3 clients, each one: Debian Squeeze + Apache + Pluck CMS

Each client ran its own httpd, and every modification triggered the fwr.sh script to sync with the central server. This modifies data on the central server, and these modifications in turn trigger fwr.sh to sync with all clients consecutively. This configuration works quite fast, but a larger number of clients will lead to major delays and potential inconsistencies.

Each client can keep its own list of clients in the 'fwr_clients' file and synchronize with them without touching the central server by issuing 'fwr.sh csync'. Different topologies can be used depending on the workload and the frequency of synchronization.

To deal with inconsistencies and data vandalism, a version control system can be introduced, installed at least on the central server but ideally on every client as well. The configuration should be fairly straightforward, and there is plenty of documentation on combining rsync or unison with SVN or another version control system. A more obvious solution is to replace unison with git. Git can synchronize directories, but it also keeps versions and branches and is able to merge two or more development histories. You need to install git-core and use something like this to pull in changes:
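The delay caused by syncing clients one after another could in principle be reduced by starting the per-client syncs in the background and waiting for all of them. This is an untested idea sketched under assumptions: the function name sync_clients_parallel is hypothetical, the per-client command is passed in by the caller, and whether concurrent unison runs against the same local directory behave well was not part of the original test.

```shell
#!/bin/bash
# Hypothetical variant of the csync loop: start one sync per client in the
# background and wait for all of them, instead of syncing one after another.
# Usage: sync_clients_parallel CLIENT_LIST COMMAND [ARGS...]
# COMMAND is called once per client with the client entry appended as last argument.
sync_clients_parallel() {
    local client_list=$1
    shift
    local line pid failed=0
    local pids=()
    while read -r line; do
        [ -n "$line" ] || continue          # skip empty lines
        "$@" "$line" < /dev/null &          # one background job per client
        pids+=("$!")
    done < "$client_list"
    # Collect the exit status of every job
    for pid in "${pids[@]}"; do
        wait "$pid" || failed=$((failed + 1))
    done
    [ "$failed" -eq 0 ]
}

# Example with an assumed per-client function:
#   sync_one() { unison /home/username/fwr "ssh://$1/fwr" -auto -silent -batch; }
#   sync_clients_parallel /home/username/fwr_clients sync_one
```

The function returns a non-zero status if any client failed, which lets the caller decide whether to retry or to report the peers that could not be reached.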

#!/bin/bash

# GLOBALS
sGitLogFile=~/common-git.log
sPathToLocalDir=~/common
sPathToRemoteGit=…

# Change into the local working copy and pull from the remote repository
function doGitPull {
    cd "$sPathToLocalDir" >> "$sGitLogFile" 2>&1 || return 1
    #pwd >> "$sGitLogFile" 2>&1
    #whoami >> "$sGitLogFile" 2>&1
    #printenv >> "$sGitLogFile" 2>&1
    git pull --verbose "$sPathToRemoteGit" HEAD >> "$sGitLogFile" 2>&1
}

echo "{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{" >> "$sGitLogFile" 2>&1 # Opening marker of the log entry
echo "$(date '+%F[%T]') = STARTING TIME of pull" >> "$sGitLogFile" 2>&1 # Time stamp the start of processing
doGitPull
echo "$(date '+%F[%T]') = ENDING TIME of pull" >> "$sGitLogFile" 2>&1 # Time stamp the end of processing
echo "}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}" >> "$sGitLogFile" 2>&1 # Closing marker of the log entry
echo "" >> "$sGitLogFile" 2>&1 # Blank line between log entries

And something like this to push (sync):

#!/bin/bash

# GLOBALS
sGitLogFile=~/common-git.log
sPathToLocalDir=~/common
sPathToRemoteGit=….

# Stage and commit every local change, then push it to the remote repository
function doGitPush {
    cd "$sPathToLocalDir" >> "$sGitLogFile" 2>&1 || return 1
    git add --verbose -A >> "$sGitLogFile" 2>&1
    git commit --verbose -m "bkup" >> "$sGitLogFile" 2>&1
    git push --verbose "$sPathToRemoteGit" master --receive-pack='git receive-pack' >> "$sGitLogFile" 2>&1
}

echo "{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{{" >> "$sGitLogFile" 2>&1 # Opening marker of the log entry
echo "$(date '+%F[%T]') = STARTING TIME of common-push" >> "$sGitLogFile" 2>&1 # Time stamp the start of processing
doGitPush >> "$sGitLogFile" 2>&1
echo "$(date '+%F[%T]') = ENDING TIME of common-push" >> "$sGitLogFile" 2>&1 # Time stamp the end of processing
echo "}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}}" >> "$sGitLogFile" 2>&1 # Closing marker of the log entry

These two scripts must be called one after the other from 'fwr.sh' in place of the single unison call.
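Calling the two scripts from 'fwr.sh' could look like the sketch below, which runs them in the order they are listed and skips the push when the pull fails. The function name git_sync and the script file names in the example are assumptions, and the sketch presumes that both scripts exit with a non-zero status on failure, which the scripts as listed do not yet do because their last command is an echo.

```shell
#!/bin/bash
# Hypothetical replacement for the single unison call in fwr.sh:
# pull remote changes first, and push only if the pull succeeded.
# Usage: git_sync PULL_SCRIPT PUSH_SCRIPT
git_sync() {
    local pull_script=$1 push_script=$2
    if ! bash "$pull_script"; then
        echo "pull failed, skipping push" >&2
        return 1
    fi
    bash "$push_script"
}

# Example (assumed file names): git_sync /home/username/git-pull.sh /home/username/git-push.sh
```

Stopping after a failed pull avoids pushing on top of a state that could not be merged, leaving the conflict to be resolved by hand.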

We already have a pretty useful system that suits at least one use case: collaborative web development. But what about democracy and the republic? We still need some officials to be elected, but what should they do? Since everything now belongs to everyone, the only responsibility left is to look after the central server. This job is important, but not crucial: even if an elected official performs a bad data synchronization and then issues "rm -rf /" on the central server, the data is not lost and the system still works (especially if some interconnection between clients exists, as described above). So we can elect officials without really worrying about the risks.

The only thing the central server is actually needed for is internet access to some of the data generated by clients. If the goal of the community doesn't include this requirement, the central server is no longer needed. In that case it is important to choose the right topology of client interconnections, but the good thing is: we no longer need officials!

Conclusions

I learned that many good and useful things can be built with present tools and technology. Things like Facebook, Twitter and Dropbox only support this thought: none of them contributed anything truly revolutionary technology-wise, yet they are very successful projects. I believe there are many possibilities for building great distributed systems, and it is a question of engineering, not necessarily invention.

While setting this system up I understood how easy it is to move towards a more distributed configuration, and everyone should be able to do so, at least for the sake of data safety (backups). Even though the technology is here and available, people still trust companies or corporations to do this for them. There is no reason for mediators to stand between people and any useful technology.

In this particular instance, I can say that the pseudo-distributed web server works pretty well with a small number of clients. Minor changes are synced almost seamlessly; major changes from different contributors can be stored separately (assuming some version control system is in place) or merged. There are a lot of ideas to try under this setup. Potentially, if all the clients were visible on the internet under a single name, the web server could really be distributed.

References

- Debian http://www.debian.org/

- Unison http://www.cis.upenn.edu/~bcpierce/unison/

- OpenSSH http://www.openssh.com/

- Apache http://www.apache.org/

- Watcher https://github.com/splitbrain/Watcher