Operating Systems 2014F Lecture 9

The audio from the lecture given on October 3, 2014 is now available.

Embedded systems tend to be concurrent in some way. They tend to deal with real-life events. That's where the most dangerous race conditions come up.

Therac-25 incident: classically comes up in ethics classes in computer science. It was a machine designed to give cancer patients radiation treatments (kind of dangerous, right?). Most of the way you control the radiation dose is through time: if you are exposed too long, you fry. There are intensity settings and time settings. The previous models had hardware interlocks to enforce the proper ordering of operations (hardware mechanisms to enforce things) and automatically shut off to ensure the dose was not too high. That extra hardware is kind of expensive, so the thinking was: let's do those checks in software. The older models had done them in both hardware and software. The Therac-25 had race conditions: how the operator entered information into the machine changed how the system behaved. If the operator entered or changed settings too fast, there were no hardware interlocks, the software didn't do proper mutual exclusion, and patients got too much radiation.

Elevator in Hz crashes when it gets too many people hitting multiple buttons.

Properly enforce mutual exclusion. Trying to do things in parallel.

A race condition that normally wouldn't

More interrupts happen than you expect. The stuff we are talking about with concurrency is important and dangerous. This is not a problem that we have solved in general (for the same reason we have not solved memory leaks). The clean way to do this is to grab a lock around the critical section. Programming language design: why don't you make it easy for me? In Java they have this. The conceptual term is monitors; in Java the keyword is synchronized. But most code is not written in Java. We keep thinking we've solved these problems, but we haven't. Monitors deal with the problem of race conditions on shared memory. You can still have race conditions in distributed systems even if you are using monitors. This is only going to get worse.
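As a rough illustration of grabbing a lock around a critical section, here is a minimal Python sketch. The names `run_counter` and `worker` are made up for this example; `threading.Lock` plays the role that `synchronized` plays in Java, and the `with lock:` block is the critical section.

```python
import threading

def run_counter(num_threads=4, increments=10_000):
    """Increment a shared counter from several threads, holding a lock each time."""
    counter = 0
    lock = threading.Lock()

    def worker():
        nonlocal counter
        for _ in range(increments):
            # Critical section: only one thread at a time may run this block.
            with lock:
                counter += 1  # read-modify-write on shared data

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter

if __name__ == "__main__":
    print(run_counter())
```

Without the lock, the read-modify-write on `counter` is the kind of step where two threads can interleave and lose an update.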

Concurrency is everywhere. Long term there is only one real way around this problem: simply enforce exclusive access to data. What is programming without side effects? Functional programming gets rid of most concurrency issues, because you aren't accessing the same data at the same time. At least, you as a developer are not. That's why in large distributed systems you see functional programming come in. These ideas are becoming more and more important. In the tutorials, what you are going to see is simulators (x86 simulators). They are simplified: the simulator does not do full x86, but the instructions should look familiar. It shows you the execution of multiple threads at the same time.
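To make the "no side effects" point a bit more concrete, here is a small Python sketch (not from the lecture; `square` and `parallel_squares` are hypothetical names). Each worker only reads its own argument and returns a new value, so there is no shared data to protect and no lock.

```python
from concurrent.futures import ThreadPoolExecutor

def square(x):
    """Pure function: the result depends only on the argument; nothing shared is modified."""
    return x * x

def parallel_squares(values, workers=4):
    # Each call to square() touches only its own argument, so no lock is needed.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the inputs.
        return list(pool.map(square, values))

if __name__ == "__main__":
    print(parallel_squares([1, 2, 3, 4]))
```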

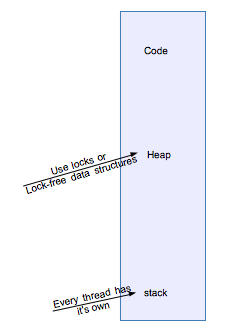

When you are talking about threads, all you are talking about is different CPU contexts. When you have a multithreaded process, you have more than one context. The kernel has to deal with the CPU context of each process, and of its own state. We don't really talk about the kernel being multithreaded. When you deal with the operating system you deal with registers. How do you share a CPU with multiple contexts?

Thread = OS abstraction for CPUs

By default your process is single threaded, which means it gets one CPU. If you want to access more than one CPU at a time, then you have to use multithreading.

How do we share the stack? They each get their own stack, we don't share the stack. The stack defines the state of the thread. Where do I store where I was? I push it onto the stack. The stack is where I keep the context for the current execution. You have multiple stacks.
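A loose illustration of "each thread gets its own stack", as a Python sketch with made-up names (`countdown`, `run_threads`). Python hides the real machine stack, so this only shows the idea: every thread has its own chain of call frames with its own copy of the local `n`, while the `results` dictionary is shared by all of them.

```python
import threading

def countdown(n):
    """Recursive call: each level's local n lives in the calling thread's own frames."""
    if n == 0:
        return 0
    return 1 + countdown(n - 1)

def run_threads(depths):
    results = {}  # shared: visible to every thread

    def worker(name, depth):
        # Each thread recurses independently; the threads never see each other's locals.
        results[name] = countdown(depth)

    threads = [
        threading.Thread(target=worker, args=(f"t{i}", d))
        for i, d in enumerate(depths)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results

if __name__ == "__main__":
    print(run_threads([3, 5]))
```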

In older variants of Unix, you had fork() and vfork(). vfork() was for running children that would then run execve. You don't bother copying the address space, because we are going to throw it away anyway. If you do a vfork(), you don't do the copy. vfork() means the child runs until execve, then the parent runs. The parent does not run while the child is running in the parent's address space. The parent goes to sleep, you get a new CPU context, the child runs a bit, and you go until the execve. If the child messes up the address space for the parent, the parent will see it. Nowadays, forget about it, we do not use vfork. That's because we figured out how to make forks fast. Forks are so fast that we don't care about trying to do these sorts of things. What about copying all the memory? If I do the fork, I logically have to copy a gig of data, yet now it happens really fast. What's the solution? The trick is to be lazy. Procrastination: how can you procrastinate the work? Only copy what you have to copy. Mark all of memory as read only. Then whenever a write happens, you copy. Only copy memory when you write to it. As long as you don't write to it, you can share memory. You have two processes that logically are just copies of each other, but in practice they share memory. If either one writes to a piece of memory, you make a copy of it.
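The logical behaviour of fork described above can be seen with a short Python sketch, assuming a POSIX system where `os.fork` is available (`fork_demo` is a hypothetical name). The copy-on-write itself happens inside the kernel and is not visible from the program; what the sketch shows is only the result, namely that a write in the child does not show up in the parent.

```python
import os

def fork_demo():
    """Fork a child that overwrites a variable; return what the parent still sees."""
    data = ["original"]
    pid = os.fork()
    if pid == 0:
        # Child: this write goes to the child's private copy of the memory.
        data[0] = "changed by child"
        os._exit(0)  # exit immediately, skipping the parent's cleanup code
    # Parent: wait for the child to finish, then look at our own copy.
    os.waitpid(pid, 0)
    return data[0]

if __name__ == "__main__":
    print(fork_demo())
```

With vfork() the child would instead be running in the parent's address space, which is why a child that messes things up there is visible to the parent.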

Given the fact that fork is cheap, that you can duplicate an entire address space very quickly, there isn't that much difference between running multiple threads in a given address space and running separate processes. When do you want to have multiple threads? What is the basic use case for that? It's not for having things run on multiple CPUs. Why deal with all of this? There are two basic reasons to have threads: 1) To share data 2) I/O
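A minimal sketch of the I/O reason, in Python with hypothetical names (`slow_io`, `main_loop`): one thread blocks on a slow operation, simulated here with `time.sleep`, while the main thread keeps running instead of freezing.

```python
import threading
import time

def slow_io(results, delay):
    """Stand-in for a blocking read from the network or disk."""
    time.sleep(delay)  # this thread blocks here, as if waiting on I/O
    results.append("io done")

def main_loop(delay=0.2):
    results = []
    io_thread = threading.Thread(target=slow_io, args=(results, delay))
    io_thread.start()
    ticks = 0
    # The main thread stays responsive while the I/O thread is blocked.
    while io_thread.is_alive():
        ticks += 1  # stand-in for handling one round of user events
        time.sleep(0.01)
    io_thread.join()
    return ticks, results

if __name__ == "__main__":
    print(main_loop())
```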

Let's say you have a very large data structure that you want to do computation on with 16 CPUs. You potentially have to do some locking, but if you want 16 CPUs to access that data at the same time, then you want to share that memory, and so you do multithreading.

The more common case is for I/O. On older Macintosh systems (Mac OS 9, 8, 7), if you held the mouse button down for a long time, the computer would drop packets coming in over the network. Nothing would happen on the computer while you held the mouse down. In general, do you want your computer doing computations while you are scrolling? Yes, you want it to do more than one thing. One thread has to pay attention to all the events coming from what you are doing to it. Someone else has to render the page. Another thread has to deal with the network.

Web browsers are highly concurrent systems, and they are all multithreaded to some degree. Modern browsers use multiple processes to ensure security: this started with Chrome, but also Firefox and IE. If you mess up things in one,

"Oh Snap", a process crashed. Does Firefox go "Oh Snap"? Nope, it crashes: the entire browser crashes, because it is only one process. Chrome is separated into multiple processes, so corruption in one doesn't result in a crash of the entire program.