Operating Systems 2014F Lecture 7

Segments: absolute vs. relative addresses, and base and bounds registers

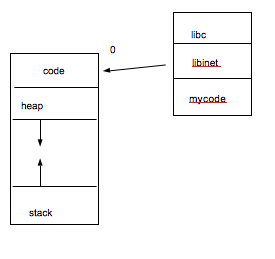

Modern systems mostly do not use static linking; instead they use dynamic linking.

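- `-fPIC`: compile shared libraries as position-independent code, so they can be loaded at any address
- ASLR (address space layout randomization): libraries are placed at randomized addresses in each process
- Dynamic linking at compile time: insert stubs (on ELF systems, PLT entries) in place of direct calls into the library
- At runtime: `mmap` the libraries into the address space, then update the tables (e.g., the GOT) with the actual addresses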
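A toy Python model of the compile-time and runtime steps above may help. It assumes made-up symbol offsets and uses a dictionary as a stand-in for the real indirection tables (on ELF systems, the PLT stubs and the GOT); a seeded random base plays the role of ASLR. It is a simulation of the idea, not how the real dynamic linker works internally.

```python
import random

# Hypothetical symbol table for a shared library: name -> offset from the
# start of the library image (made-up values, for illustration only).
LIB_SYMBOLS = {"strlen": 0x1040, "printf": 0x2080}

PAGE = 0x1000


class ToyProcess:
    """A toy process with its own randomized load address for one library."""

    def __init__(self, seed: int) -> None:
        rng = random.Random(seed)
        # ASLR stand-in: choose a page-aligned base address at random.
        self.lib_base = rng.randrange(0x7F0000000000, 0x7FFF00000000, PAGE)
        # The table the dynamic linker fills in (loosely modeled on the GOT).
        self.table: dict[str, int] = {}

    def load_library(self) -> None:
        # Runtime step: after "mapping" the library at lib_base, resolve each
        # symbol to an absolute address and record it in the table.
        for name, offset in LIB_SYMBOLS.items():
            self.table[name] = self.lib_base + offset

    def call(self, name: str) -> int:
        # Compile-time stub stand-in: the program never hard-codes the
        # library's address; it always goes through the table.
        return self.table[name]


p1, p2 = ToyProcess(seed=1), ToyProcess(seed=2)
for p in (p1, p2):
    p.load_library()
    print(hex(p.lib_base), hex(p.call("strlen")))
```

Each process gets a different base, yet `call("strlen") - lib_base` is the same offset in both. That is why position-independent code only needs relative addresses plus a per-process table.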

I wonder what system calls happen when I run a statically linked version of the program. Some system calls happen no matter what, for example to set up thread state for multithreading support, even if your program isn't multithreaded. It gets complicated. The key thing in today's tutorial is looking this complexity in the face: what does the assembly look like, what does the object code look like, and what is this linking that happens? I do expect you to understand a couple of key bits and why they are there. The main idea here is dynamic linking.

One real benefit of loading a library dynamically is that it can be mapped at different addresses in different processes' address spaces. If you have 100 processes running, how many copies of the C library are loaded into memory? Essentially one copy of its code: when you mmap a file into one process and then mmap it into another, it doesn't take any additional memory, because the data is the same. Only its position in memory differs from process to process. That's what we mean by shared libraries: shared in memory. It means you can have very big libraries loaded, and only the parts of a library that are actually referenced get loaded into memory; the rest stays on disk. As a user-space programmer you don't have to worry about this, but if you do low-level programming, you will see segments.
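A small sketch of the sharing idea using Python's standard `mmap` module. As a simplifying assumption, two mappings of the same file inside one process stand in for two processes. A write through one mapping is visible through the other because both are backed by the same underlying file pages rather than separate copies. Python cannot directly show that no extra physical memory is used, so treat this as an illustration only.

```python
import mmap
import tempfile


def shared_mapping_demo() -> bytes:
    """Map one file twice and show that both mappings see the same bytes."""
    with tempfile.TemporaryFile() as f:
        # Give the file one page of zeroed content so it can be mapped.
        f.write(b"\0" * mmap.PAGESIZE)
        f.flush()
        # Two independent mappings of the same file. On Unix the default
        # flags are MAP_SHARED, so both refer to the same file pages.
        a = mmap.mmap(f.fileno(), mmap.PAGESIZE)
        b = mmap.mmap(f.fileno(), mmap.PAGESIZE)
        try:
            a[0:5] = b"hello"  # write through the first mapping...
            return b[0:5]      # ...and read it back through the second
        finally:
            a.close()
            b.close()


print(shared_mapping_demo())  # b'hello'
```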

Why do we have this model of how a program works? Partly it's historical. Suppose we forgot about the whole virtual address space, since we are already doing redirection inside the address space: why not just access physical memory directly? You could still keep things separate. But that's not how most of our existing code expects the world to work. The model is established, and now all of that code runs on it.

The mental model to have in your head: while absolute addresses exist, much of the code and data we reference is not expressed in absolute addresses but in relative addresses. There is some base address, and it is added to the relative address.

For a "segment":

base + offset (relative address) => absolute address

The relative addresses are the ones you see.
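As a worked example, assuming a made-up base address of 0x4000 and a relative address (offset) of 0x2A:

$$
\text{absolute address} = \text{base} + \text{offset} = \mathtt{0x4000} + \mathtt{0x2A} = \mathtt{0x402A}
$$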

You have to name the segment, that is, know which segment you are talking about and which register holds the base address you are offsetting from. For whatever data we want to access, we keep one register with the base address of the data segment. This is the stuff the compiler worries about, so we don't have to.
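A minimal sketch of naming a segment, assuming a hypothetical table `SEGMENT_BASES` that plays the role of the segment base registers (the names and addresses are made up):

```python
# Hypothetical segment "registers": segment name -> base address.
# The values are made up; real values are chosen by the OS and loader.
SEGMENT_BASES = {"code": 0x0040_0000, "data": 0x0060_0000, "stack": 0x7FFF_0000}


def to_absolute(segment: str, offset: int) -> int:
    """Translate (segment, relative offset) into an absolute address."""
    base = SEGMENT_BASES[segment]  # which segment, i.e. which base register
    return base + offset


# The same relative offset means different things in different segments.
print(hex(to_absolute("data", 0x10)))   # 0x600010
print(hex(to_absolute("stack", 0x10)))  # 0x7fff0010
```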

Can you run out of virtual memory, that is, have no room to allocate anything because your address space is full? It is possible. A 32-bit address space only has 4 GB, which is not that much space. We have now largely moved to 64-bit address spaces, and it's not as if you would actually allocate that much memory.
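The arithmetic behind these sizes, as a quick Python check. The 48-bit line reflects that many current x86-64 processors implement only 48 bits of virtual address, even with 64-bit pointers:

```python
def address_space_bytes(bits: int) -> int:
    """Number of distinct byte addresses reachable with `bits`-bit addresses."""
    return 2 ** bits


GIB = 2 ** 30
TIB = 2 ** 40

print(address_space_bytes(32) // GIB)  # 4 (GiB)
print(address_space_bytes(48) // TIB)  # 256 (TiB)
print(address_space_bytes(64) // GIB)  # 17179869184 (GiB), i.e. 16 EiB
```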

This is the low-level mechanism: base and bounds. One thing to keep in mind about segments is that their size is variable, so the range of valid offsets can vary a lot. Each segment has its own size, and when you load it into memory you have to keep track of that size (the bound).
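A minimal base-and-bounds sketch, assuming a hypothetical `Segment` record with a base and a bound, and a made-up `SegmentationFault` exception standing in for the hardware trap:

```python
from dataclasses import dataclass


class SegmentationFault(Exception):
    """Raised when an offset falls outside a segment's bound."""


@dataclass
class Segment:
    base: int   # absolute (virtual) address where the segment starts
    bound: int  # size of the segment in bytes; valid offsets are 0..bound-1


def translate(seg: Segment, offset: int) -> int:
    # Check the bound first: every segment has its own, variable size.
    if not 0 <= offset < seg.bound:
        raise SegmentationFault(f"offset {offset:#x} outside bound {seg.bound:#x}")
    return seg.base + offset


code = Segment(base=0x1000, bound=0x800)   # small segment
heap = Segment(base=0x8000, bound=0x4000)  # much larger segment
print(hex(translate(heap, 0x1000)))        # 0x9000
```

In hardware, the bound check and the addition happen on every memory reference; the sketch just makes the order (check, then add) explicit.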

None of this has anything to do with physical addresses; these are absolute virtual addresses. Computers used to run one program at a time in a single address space. In a sense, it is easier to emulate having multiple physical computers than to have everyone share one computer.

If you want multiple address spaces, you could have multiple computers, with processes talking to each other, but then you are talking about networking.

For example, consider a Java VM. It runs inside a process, inside a virtual address space, and implements its own machine code, with its own memory model and its own address references; Java programs refer to addresses inside that machine. At the base level, the only addresses you will see are virtual addresses, and they act somewhat like physical addresses. The difference is that with physical addresses, every address is valid, whereas with virtual addresses you have to ask whether an address is actually valid. You won't get segfaults on physical memory. If you try to access an address for which there is no actual RAM (say you only have 4 GB of RAM), you are done, but that isn't a segmentation violation; it's the hardware saying "I don't have that address; that RAM does not exist."

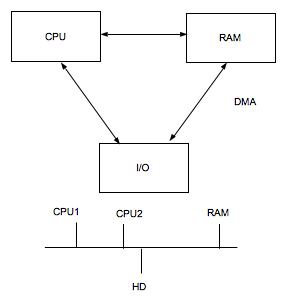

Regular programs won't see that. Normally it generates an error, perhaps a bus error. A bus enables shared communication, but very few modern systems are built around buses; instead they have point-to-point connections, where every processor has a direct line of communication to each of the other processors. They may still have a shared bus of some kind, because of bus snooping (used to keep caches coherent).

What is a cache? Originally, a cache is a store of supplies. What Arctic explorers might do is depend on there being caches along the way: at certain places in the area there would be caches of material, so you don't have to carry all of that stuff with you. You drop off material 100 miles in, 200 miles in, and so on, leaving supplies along the way; then all you need to carry is just enough to get to the next cache. That lowers the amount of stuff you have to carry with you. (Iditarod)

The computer version of a cache holds frequently used code and data. Programs exhibit code and data locality: they don't access memory randomly, and random access is slow. The entire architecture is structured around the fact that code and data have high locality in time and space: if you access one bit of code or data, you will probably access it again sooner rather than later (temporal locality), and you will probably access nearby code or data as well (spatial locality). To exploit code and data locality, modern systems implement a memory hierarchy.
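A rough way to see why locality matters is to simulate a tiny cache. This sketch assumes a fully associative LRU cache with made-up parameters (64 lines of 64 bytes), far simpler than real hardware, and compares three access patterns:

```python
import random
from collections import OrderedDict


def hit_rate(addresses, num_lines=64, line_size=64):
    """Simulate a tiny fully associative LRU cache and return its hit rate.

    num_lines and line_size are illustrative parameters, not real hardware values.
    """
    cache = OrderedDict()  # line number -> None, least recently used first
    hits = 0
    for addr in addresses:
        line = addr // line_size
        if line in cache:
            hits += 1
            cache.move_to_end(line)        # mark as most recently used
        else:
            cache[line] = None
            if len(cache) > num_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return hits / len(addresses)


N = 100_000
sequential = list(range(N))                   # walk memory byte by byte
looping = [i % 2048 for i in range(N)]        # reuse a small 2 KiB region
rng = random.Random(0)
scattered = [rng.randrange(0, 64 * 1024 * 1024) for _ in range(N)]  # random bytes in 64 MiB

print(f"sequential: {hit_rate(sequential):.3f}")
print(f"looping:    {hit_rate(looping):.3f}")
print(f"random:     {hit_rate(scattered):.3f}")
```

The sequential walk misses only once per 64-byte line (spatial locality), the small loop fits entirely in the cache after its first pass (temporal locality), and the random pattern almost never hits.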

What's the fastest memory on the computer? Registers.

The hardware manages the top part of the hierarchy (e.g., the CPU caches).

The OS manages the lower part (e.g., main memory backed by disk).

Parts of a program's virtual address space will reside in registers; the process is spread throughout the memory hierarchy. What gets loaded where depends on the system's ability to predict data locality, so it is kind of a scheduling problem. If you want to access a variable that is sitting in main memory, it first needs to be loaded into a register. If you have to wait on RAM before the data reaches the register, it will take a long time; if it's sitting in the L1 cache, it's accessed much faster. How does this relate to the address space? When we talk about loading segments, bases, and bounds, we organize things in terms of segments because we want to maximize code and data locality: the closer together we put things that we access frequently, the faster the system will perform.
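One standard way to quantify "much faster" is the average memory access time formula: hit time plus miss rate times miss penalty. The latencies below are illustrative assumptions in CPU cycles, not measurements of any particular machine:

```python
def average_access_time(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """Average memory access time = hit_time + miss_rate * miss_penalty."""
    return hit_time + miss_rate * miss_penalty


# Illustrative numbers only (in CPU cycles); real latencies vary by machine.
L1_HIT = 4
RAM_PENALTY = 200

print(average_access_time(L1_HIT, 0.02, RAM_PENALTY))  # 8.0   (good locality)
print(average_access_time(L1_HIT, 0.50, RAM_PENALTY))  # 104.0 (poor locality)
```

Even with these made-up numbers, the point carries over: the miss rate, which is driven by locality, dominates how fast memory appears to be.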