DistOS-2011W BigTable: Difference between revisions

Revision as of 16:57, 1 March 2011

Introduction

Bigtable is a distributed storage system used at Google. Its main purpose is to let enormous amounts of data scale reliably across a large number of computers. Distribution transparency, meaning that applications see a single table rather than the individual machines behind it, is of key importance in the overall framework.

Projects and applications that require a large amount of storage space are well suited to this type of system. Google projects that use Bigtable include Google Earth, the Google web-indexing engine, and the Google App Engine. These applications store very different types of information, from URLs and web pages to satellite images. Some of them require low latency, while others require high-throughput batch processing.

The design and framework of Bigtable are of considerable interest; however, Bigtable is not open source and is not accessible to the general public. This is a problem for an implementation report, so we will use Apache’s open-source Hadoop framework to get an understanding of how to configure, run, and deploy applications across such a system. Throughout the rest of the paper, we will compare the two platforms and comment on their similarities.

Real World Implementations

Bigtable is a proprietary system at Google, so it is currently only used and implemented by them. There are, however, related implementations in the open-source world, notably Apache Hadoop. Strictly speaking, Hadoop is based on the papers released by Google on MapReduce and the Google File System (GFS) rather than on Bigtable itself; the closest open-source counterpart to Bigtable is Apache HBase, which runs on top of Hadoop. The Hadoop framework allows applications to be run on large clusters of commodity hardware. Some organizations that make significant use of Hadoop are Yahoo, eBay, Facebook, Twitter, and IBM.

In the next two sections, we discuss the features and underlying design of the two frameworks.

Google’s Implementation

Bigtable was designed with the following in mind:

- Wide applicability

- Scalability

- High performance

- High availability

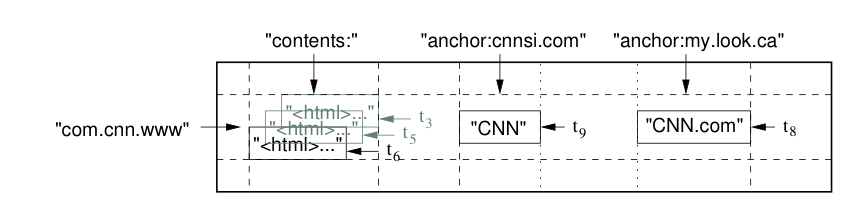

Bigtable is in some ways similar to a traditional database. Data is accessed using a unique row identifier, a column identifier, and a timestamp. The data itself is treated as uninterpreted strings, but applications often store serialized objects in these strings to represent more complicated structures.

Every read or write of data under a single row key is atomic, which makes the system's behaviour easier to reason about when the same row is accessed concurrently.
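The addressing scheme described above can be pictured as a map from (row, column, timestamp) to a string. The following is a minimal Python sketch under that assumption only; `SketchTable` and its methods are hypothetical names for illustration and are not part of any Bigtable or Hadoop API.

```python
from typing import Dict, Optional, Tuple

# Hypothetical in-memory stand-in for a Bigtable-style table:
# (row key, column key, timestamp) -> uninterpreted string value.
Cell = Tuple[str, str, int]


class SketchTable:
    def __init__(self) -> None:
        self._cells: Dict[Cell, str] = {}

    def put(self, row: str, column: str, timestamp: int, value: str) -> None:
        """Store one version of a cell."""
        self._cells[(row, column, timestamp)] = value

    def get(self, row: str, column: str,
            timestamp: Optional[int] = None) -> Optional[str]:
        """Return the value at `timestamp`, or the newest version if omitted."""
        versions = {
            ts: value
            for (r, c, ts), value in self._cells.items()
            if r == row and c == column
        }
        if not versions:
            return None
        if timestamp is None:
            timestamp = max(versions)  # newest version wins by default
        return versions.get(timestamp)


table = SketchTable()
table.put("com.example.www", "contents:", 1, "<html>v1</html>")
table.put("com.example.www", "contents:", 2, "<html>v2</html>")
```

Calling `table.get("com.example.www", "contents:")` returns the newest version, while passing an explicit timestamp selects an older one.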

Structure of Data

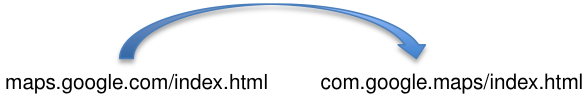

Since the data is distributed over many computers, related data can end up in different locations. Bigtable keeps rows in lexicographic order by row key and partitions them into contiguous row ranges known as tablets. When a read touches tablets held by only a small number of computers, it becomes more efficient. In many situations, such as URLs for web indexing, the hostname parts are therefore reversed (for example, maps.google.com/index.html is stored under com.google.maps/index.html) so that pages from the same domain are grouped together lexicographically, which in turn speeds up searches.

Individual column identifiers, on the other hand, are grouped into what is known as a column family. Data within a specific family is usually of the same type and is compressed together. Within each family there can be many columns. These groupings are useful for situations such as user authorization, where some users have more privileges and can view more data than others. In this case, a user with limited access would only have access to a select few column families.

Along with row and column identifiers, there are timestamps. These allow several versions of the same cell to be stored. Timestamps are usually set automatically by the system, but applications can also set them manually as 64-bit integers.
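The hostname reordering can be sketched in a few lines of Python. This is only an illustration of the idea; `row_key_for_url` is a hypothetical helper, the sample URLs are made up, and the sketch assumes absolute URLs that contain a hostname.

```python
from urllib.parse import urlsplit


def row_key_for_url(url: str) -> str:
    """Build a row key with the hostname components reversed.

    Assumes an absolute URL; the scheme, query, and fragment are dropped.
    """
    parts = urlsplit(url)
    reversed_host = ".".join(reversed(parts.hostname.split(".")))
    return reversed_host + parts.path


urls = [
    "http://maps.google.com/index.html",
    "http://www.example.org/about",
    "http://mail.google.com/inbox",
]
# Sorting the keys places both google.com pages next to each other.
sorted_keys = sorted(row_key_for_url(u) for u in urls)
```

After sorting, the two `com.google.*` keys are adjacent, which is the property that lets pages from one domain fall into the same or neighbouring tablets.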
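As a rough illustration of family-level access control, the sketch below filters a row down to the column families a user may read. It assumes column keys of the form `family:qualifier`; the function name, the `billing` family, and the sample values are hypothetical.

```python
from typing import Dict, Set


def visible_columns(row: Dict[str, str],
                    allowed_families: Set[str]) -> Dict[str, str]:
    """Keep only columns whose family (the part before ':') is allowed."""
    return {
        column: value
        for column, value in row.items()
        if column.split(":", 1)[0] in allowed_families
    }


row = {
    "contents:": "<html>...</html>",
    "anchor:cnnsi.com": "CNN",
    "billing:card": "hypothetical sensitive value",
}
# A user with limited access sees only two of the three families.
limited_view = visible_columns(row, {"contents", "anchor"})
```

Because permissions are checked per family rather than per column, adding a new column inside an existing family needs no change to the access rules.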

Garbage Collection

Bigtable also provides a garbage collection feature for old cell versions. For example, a cell with multiple versions can be trimmed so that only the last n versions are kept, or only the versions that were written within a given time window, such as the last three days.
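The two retention rules can be sketched as a single function over the versions of one cell. This is a simplified model, not Bigtable's actual mechanism; `collect_garbage` and its parameters are hypothetical names, and timestamps are plain integers.

```python
from typing import Dict, Optional


def collect_garbage(versions: Dict[int, str],
                    keep_last: Optional[int] = None,
                    min_timestamp: Optional[int] = None) -> Dict[int, str]:
    """Return the versions that survive the given retention rules.

    versions      -- timestamp -> value for a single cell
    keep_last     -- if set, keep at most this many newest versions
    min_timestamp -- if set, drop versions older than this timestamp
    """
    kept = sorted(versions, reverse=True)  # newest first
    if min_timestamp is not None:
        kept = [ts for ts in kept if ts >= min_timestamp]
    if keep_last is not None:
        kept = kept[:keep_last]
    return {ts: versions[ts] for ts in kept}


versions = {100: "a", 200: "b", 300: "c", 400: "d"}
newest_two = collect_garbage(versions, keep_last=2)
recent_only = collect_garbage(versions, min_timestamp=250)
```

Here both rules happen to keep the versions at timestamps 300 and 400; in general the two settings are independent and can be combined.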

Apache’s Implementation – Hadoop

The Hadoop framework uses Google’s MapReduce programming model to split an application into many small units of work that can be executed in parallel across multiple machines.
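To make the idea concrete, here is a single-process Python sketch of the map, shuffle, and reduce steps using the usual word-count example. It does not use the Hadoop API; all function names are hypothetical, and in a real cluster each phase would run on many machines.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(document: str) -> Iterator[Tuple[str, int]]:
    """Emit (word, 1) for every word in one input document."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group all emitted values by key, as the framework would between phases."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Combine all values for one key into a single count."""
    return key, sum(values)


def word_count(documents: Iterable[str]) -> Dict[str, int]:
    pairs = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


counts = word_count(["big table", "big data"])
```

Each call to `map_phase` and `reduce_phase` depends only on its own input, which is what allows the work to be spread over many machines.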

Components

A typical Hadoop installation consists of:

- Hadoop Common
  - Access to the filesystems of Hadoop
  - Files to start Hadoop
  - Documentation, source code
- HDFS
  - Distributed, scalable, portable file system written in Java
  - Uses TCP/IP for communication
  - Clients use Remote Procedure Calls (RPC) to communicate with each other
  - Splits data across multiple machines
  - Usually replicates data on 3 nodes: 2 on the same rack, 1 on another rack
  - Single Master and multiple Slave nodes
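The replication rule in the list above (three copies, two on one rack and one on another) can be sketched as follows. This is a deliberately simplified model of the rule as stated here, not HDFS's actual placement code; `place_replicas`, the rack names, and the node names are all hypothetical.

```python
from typing import Dict, List


def place_replicas(racks: Dict[str, List[str]], primary_rack: str) -> List[str]:
    """Choose three nodes: two on `primary_rack` and one on a different rack."""
    same_rack_nodes = racks[primary_rack]
    if len(same_rack_nodes) < 2:
        raise ValueError("primary rack needs at least two nodes")
    other_racks = [
        name for name in sorted(racks)
        if name != primary_rack and racks[name]
    ]
    if not other_racks:
        raise ValueError("need at least one other non-empty rack")
    # Two copies share a rack; the third survives a whole-rack failure.
    return same_rack_nodes[:2] + [racks[other_racks[0]][0]]


racks = {"rack-a": ["a1", "a2", "a3"], "rack-b": ["b1"]}
replicas = place_replicas(racks, "rack-a")
```

Keeping two copies on one rack keeps most replication traffic local, while the copy on a second rack protects against losing an entire rack.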

Evaluated Systems/Programs

The installation of Hadoop is quite straightforward. After unzipping the compressed Hadoop folder, two files need to be edited (the paths shown below are for Mac OS X):

- conf/hadoop-env.sh

- bin/hadoop

For the first file, find the following line:

# export JAVA_HOME=/usr/lib/j2sdk1.6-sun

and change it to:

export JAVA_HOME=/System/Library/Frameworks/JavaVM.framework/Versions/1.6.0/

For the second file, find the following line:

JAVA=$JAVA_HOME/bin/java

and change it to:

JAVA=$JAVA_HOME/Commands/java
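If the two substitutions above need to be repeated on several machines, they could be scripted. The sketch below is one possible approach and works on file contents passed in as strings; `patch_line` is a hypothetical helper, and reading and writing the actual files is left out.

```python
def patch_line(text: str, old: str, new: str) -> str:
    """Replace every line equal to `old` (ignoring surrounding spaces) with `new`."""
    lines = text.splitlines()
    if not any(line.strip() == old for line in lines):
        raise ValueError("line to replace not found: " + old)
    patched = [new if line.strip() == old else line for line in lines]
    return "\n".join(patched) + "\n"


# Sample content standing in for the relevant part of conf/hadoop-env.sh.
env_sh = "# The java implementation to use.\n# export JAVA_HOME=/usr/lib/j2sdk1.6-sun\n"
env_sh = patch_line(
    env_sh,
    "# export JAVA_HOME=/usr/lib/j2sdk1.6-sun",
    "export JAVA_HOME=/System/Library/Frameworks/JavaVM.framework/Versions/1.6.0/",
)

# Sample content standing in for the relevant line of bin/hadoop.
hadoop_script = "JAVA=$JAVA_HOME/bin/java\n"
hadoop_script = patch_line(
    hadoop_script,
    "JAVA=$JAVA_HOME/bin/java",
    "JAVA=$JAVA_HOME/Commands/java",
)
```

Raising an error when the expected line is missing avoids silently leaving a file unpatched, for example when a different Hadoop version ships a different default.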

The Hadoop installation is now complete, and we can move on to the APIs and to creating a project.

I looked at two possible deployment options: local standalone mode and pseudo-distributed mode. The first is very simple and involves


Experiences/Comparison (multiple sections)

In multiple sections, describe what you learned.

Discussion

What was interesting? What was surprising? Here you can go out on tangents relating to your work

Conclusion

Summarize the report, point to future work.

References

Apache Hadoop. Apache. (February 23, 2011) [1]

Apache Hadoop Wiki. Apache. (February 23, 2011) [2]

Bhandarkar, M. Practical Problem Solving With Apache-Hadoop. Yahoo Inc. (February 11, 2011) [3]

BigTable. Wikipedia. (February 15, 2011) [4]

Chang F. et al. Bigtable: A Distributed Storage System for Structured Data. Google Inc. (February 11, 2011) [5]

Computing and Information Science. Cornell University. (February 23, 2011) [6]

Distributed Systems. Google Code University. (February 23, 2011) [7]

Hadoop. Wikipedia. (February 23, 2011) [8]