DistOS-2011W Cassandra and Hamachi

Revision as of 02:27, 1 March 2011

In the beginning

The Internet has seen remarkable growth over the last few years, both technologically and socially. The demand for real-time information has increased at exponential rates and has put existing information systems to the test. For this paper I decided to look at two new software projects that have emerged over the last few years: Cassandra and Hamachi. Cassandra is a distributed database that evolved from the needs of websites such as Twitter and Facebook, whose need for frequent and short updates was too taxing on standard RDBMSs. Hamachi, which is in part a commercial project now, started as an open-source project aimed at creating zero-config VPNs. The project is now part of LogMeIn but is still free to use for non-commercial purposes.

Under normal circumstances you would not find these two projects paired together, but the idea of running a distributed database over a reasonably secure connection was one I thought was worth exploring. Over the course of this paper I'll discuss both projects in a reasonable amount of detail before describing my implementation experiment and experience.

Cassandra

Cassandra is a distributed, decentralized, scalable and fault-tolerant database system (or at least it claims to be) currently under development at the Apache Software Foundation. It borrows features from both the Bigtable and Dynamo projects, but has more focus on decentralization. It sports a tunable consistency and replication system which, together with a flexible schema system, can quickly adapt to a site or project's growing needs. Well, enough salesman talk, let's get to the details.

Over the course of this subsection I'll be covering some of the basic concepts of Cassandra and how they contribute to the "bigger picture", as well as some implementation and installation details.

The Data Model

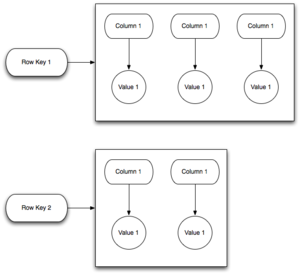

Cassandra takes a different approach compared to RDBMSs when it comes to how data is conceptualized and managed. In a standard RDBMS, data is normalized into a series of tables with a set number of columns. For the most part, the types and number of these columns change only rarely, as the need arises. For a later analogy, we'll refer to this model as a "Narrow Row" model. Cassandra operates on a system of Column Families, which are analogous to tables in an RDBMS but are much more flexible. Column Families (hereafter referred to as CFs) are loose groupings of common data, with each row keyed by some value, and are themselves grouped together into Keyspaces. However, as opposed to the static feel of an RDBMS table, the number of columns or even the types of columns can and will change under Cassandra. That isn't to say there is no form of schema in Cassandra, because there is a simple type-enforcement schema system in place for each CF. To complete the analogy, the model for Cassandra can be seen as "Wide Row", with the number of columns growing however you please. Different rows in the same CF can even have a different number of columns. Internally, Cassandra uses an "always appending" model when writing data to disk, similar to Google's Bigtable or GFS.
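To make the "Wide Row" idea a little more concrete, here is a minimal sketch that pictures a Keyspace as nested dictionaries. It is only a mental model: the CF name `Users`, the row keys and the `insert` helper are all made up for illustration, and none of this reflects Cassandra's storage format or any client API.

```python
# Toy in-memory picture of the data model (illustrative only; this is not
# how Cassandra stores data and not a client API).
# Keyspace -> Column Family -> row key -> {column name: value}
keyspace = {
    "Users": {  # a Column Family (hypothetical name)
        "alice": {"email": "alice@example.com", "city": "Ottawa"},
        "bob": {"email": "bob@example.com"},  # fewer columns, same CF
    }
}


def insert(cf_name, row_key, column, value):
    """Add or overwrite one column in a row, creating the row if needed."""
    row = keyspace.setdefault(cf_name, {}).setdefault(row_key, {})
    row[column] = value


# A wide row simply gains new columns over time; there is no ALTER TABLE step.
insert("Users", "bob", "last_login", "2011-02-28")
insert("Users", "bob", "twitter", "@bob")

# Rows in the same CF end up with different numbers of columns.
column_counts = {key: len(cols) for key, cols in keyspace["Users"].items()}
print(column_counts)
```

The point of the sketch is that adding a column is just another write to one row, and that the other rows in the CF are not affected by it.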

The downside to such a data model is that Cassandra has no actual query language; there is no equivalent to SQL. There is no support for referential integrity, either. Under these circumstances, maintaining data normalization while keeping processing time to a minimum is extremely difficult, and normalization is actively frowned upon. That's right, data duplication is encouraged when working with Cassandra. Luckily, the latest version (0.7.0 as of this writing) has introduced indexes, declared through the metadata that can be assigned to a column. These allow basic boolean logic queries to be constructed to filter results better.
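As a rough illustration of why duplication is encouraged, the sketch below writes each post into two hypothetical CFs, one keyed by post id and one keyed by author, so that each read pattern becomes a single row lookup instead of a join. The CF names and the `save_post` helper are assumptions of mine, and plain dictionaries stand in for the database.

```python
from collections import defaultdict

# Two hypothetical Column Families modelled as dictionaries of rows.
posts_by_id = {}                     # row key: post id
posts_by_author = defaultdict(dict)  # row key: author, column name: post id


def save_post(post_id, author, text):
    """Write the post twice so that each read pattern is one row lookup."""
    posts_by_id[post_id] = {"author": author, "text": text}
    posts_by_author[author][post_id] = text  # deliberately duplicated text


save_post("p1", "alice", "hello ring")
save_post("p2", "bob", "first post")
save_post("p3", "alice", "still here")

# "All posts by alice" needs no join and no scan: read one row.
alice_posts = sorted(posts_by_author["alice"].items())
print(alice_posts)
```

The cost of this design is that every write has to update both copies, and nothing in the database keeps them in step for you.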

Scaling

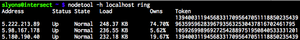

While Cassandra can operate on a single machine, the power lies in setting up a Cassandra cluster. Each instance is referred to as a node within the cluster. In Cassandra nomenclature the nodes form a ring and divide the keyspace amongst all of the nodes based on a specified partitioner. There are a number of partitioners available, including some that take physical location into account.
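The ring idea can be sketched in a few lines. The version below assumes a hash-based partitioner that turns each row key into an MD5 token, and it gives a key to the first node whose token is greater than or equal to the key's token, wrapping around at the end. The `Ring` class, the node names and the evenly spaced tokens are illustrative assumptions rather than Cassandra's implementation.

```python
import bisect
import hashlib


def token_for(key):
    """Map a row key to an integer token using MD5 (an assumption, chosen
    to resemble a hash-based partitioner)."""
    return int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16)


class Ring:
    def __init__(self, node_tokens):
        # node_tokens: {node name: token}; sorting by token forms the ring.
        self._pairs = sorted((tok, name) for name, tok in node_tokens.items())
        self._tokens = [tok for tok, _ in self._pairs]

    def owner(self, key):
        """Return the first node whose token is >= the key's token,
        wrapping around to the start of the ring."""
        idx = bisect.bisect_left(self._tokens, token_for(key))
        return self._pairs[idx % len(self._pairs)][1]


# Three hypothetical nodes with evenly spaced tokens over the MD5 range.
SPACE = 2 ** 128
ring = Ring({"node-a": 0, "node-b": SPACE // 3, "node-c": 2 * SPACE // 3})
for key in ("alice", "bob", "carol"):
    print(key, "->", ring.owner(key))
```

Because the owner depends only on the key's hash and the node tokens, any node can work out where a key lives without asking a central coordinator.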

Fault Tolerance

Cassandra employs two protocols that make up its fault tolerance system: Gossip and Handoffs. Gossip is an extension of the usual heartbeat model of monitoring other servers, and involves a three-message exchange in the style of TCP's SYN / SYN+ACK / ACK handshake to verify the liveness of another node in the ring. When a node attempts to contact another and fails, it "gossips" this fact to the others. Using an accrual failure detector, which maintains a continuously updated suspicion level for each node rather than a simple up/down flag, the ring can keep track of problem areas and adjust accordingly. The second half of this fault tolerance suite is the Handoff protocol (called Hinted Handoff in Cassandra's own terminology). When a write destined for a downed node is received by the ring, other nearby nodes will accumulate and keep these entries until the downed node is restored. During startup a node will check whether any outstanding handoff messages are destined for it and import them promptly.
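The handoff half of this can be sketched as follows. This is a deliberately tiny model with made-up `Node` and `write` names: a write for a downed node is parked on a neighbour as a hint and delivered once the node is back. In this sketch the neighbour pushes the hints, which is a simplification of the startup check described above.

```python
from collections import defaultdict


class Node:
    def __init__(self, name):
        self.name = name
        self.alive = True
        self.data = {}                  # row key -> value
        self.hints = defaultdict(list)  # target node name -> pending writes

    def replay_hints_to(self, target):
        """Deliver every write held for `target` and clear the backlog."""
        for key, value in self.hints.pop(target.name, []):
            target.data[key] = value


def write(key, value, owner, neighbour):
    """Write to the owner, or leave a hint with a neighbour if it is down."""
    if owner.alive:
        owner.data[key] = value
    else:
        neighbour.hints[owner.name].append((key, value))


a, b = Node("a"), Node("b")
a.alive = False
write("k1", "v1", owner=a, neighbour=b)  # a is down, so b keeps a hint
write("k2", "v2", owner=a, neighbour=b)

a.alive = True        # a restarts...
b.replay_hints_to(a)  # ...and the held writes are delivered to it
print(a.data)
```

Note that while the hints are parked, a read sent to the restored node before replay would miss those writes, which is one reason the consistency level chosen by the client matters.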

Replication

One of the nice features of Cassandra is a built-in replication system. When a Keyspace is created, the replication factor dictates how many copies of column data will be spread out over the ring. When reading from or writing to the nodes, clients can specify the consistency level to be observed relative to the Keyspace's replication factor. Like most distributed databases, Cassandra offers eventual consistency.
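One simple way to picture the replication factor is to place the copies on consecutive nodes around the ring, starting from the node that owns the key. The sketch below assumes exactly that placement rule; Cassandra offers more than one placement strategy, and the `replicas_for` helper and node names are hypothetical.

```python
def replicas_for(ring_nodes, primary_index, replication_factor):
    """Return the primary node plus the next nodes clockwise on the ring.

    Assumes the simplest placement rule (consecutive nodes); this is an
    illustration, not a description of every strategy Cassandra supports.
    """
    if replication_factor > len(ring_nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    return [
        ring_nodes[(primary_index + i) % len(ring_nodes)]
        for i in range(replication_factor)
    ]


ring_nodes = ["node-a", "node-b", "node-c", "node-d"]  # in token order
placement = replicas_for(ring_nodes, primary_index=3, replication_factor=3)
print(placement)
```

With four nodes and a replication factor of three, a key owned by the last node wraps around and is also stored on the first two.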

Consistency

- ONE consistency
  - The client will block until the first available replica node responds to the request.

- QUORUM consistency
  - The client will block until a majority of the replica nodes have responded, so that they agree on the data to return.

- ALL consistency
  - The client will block until all replica nodes have responded and would return the same data.

I'll give an example of the impacts of the various consistency levels later in my implementation report.
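The three levels boil down to simple arithmetic on the replication factor. The sketch below is my own illustration rather than anything from the Cassandra code base: it computes how many replicas must respond at each level, and applies the usual rule of thumb that a read is guaranteed to overlap the latest write when the read and write counts together exceed the replication factor.

```python
def required_responses(level, replication_factor):
    """Number of replicas that must respond before the client unblocks."""
    if level == "ONE":
        return 1
    if level == "QUORUM":
        return replication_factor // 2 + 1  # strict majority
    if level == "ALL":
        return replication_factor
    raise ValueError("unknown consistency level: " + level)


def reads_overlap_writes(read_level, write_level, replication_factor):
    """True when R + W > N, so every read set shares a replica with the
    most recent successful write set."""
    r = required_responses(read_level, replication_factor)
    w = required_responses(write_level, replication_factor)
    return r + w > replication_factor


rf = 3
for level in ("ONE", "QUORUM", "ALL"):
    print(level, required_responses(level, rf))

print(reads_overlap_writes("QUORUM", "QUORUM", rf))
print(reads_overlap_writes("ONE", "ONE", rf))
```

With a replication factor of three, reading and writing at QUORUM overlap on at least one replica, whereas reading and writing at ONE do not, which is where the "eventual" in eventual consistency shows up.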

Other options

Cassandra shares some common ground with a number of other projects:

- Bigtable

- Dynamo

- Riak

- CouchDB

Evaluated Systems/Programs

Describe the systems individually here - their key properties, etc. Use subsections to describe different implementations if you wish. Briefly explain why you made the selections you did.

Experiences/Comparison (multiple sections)

In multiple sections, describe what you learned.

Discussion

What was interesting? What was surprising? Here you can go out on tangents relating to your work

Conclusion

Summarize the report, point to future work.

References

Give references in proper form (not just URLs if possible, give dates of access).